3. Descriptive Statistics: Central Tendency and Dispersion

3.2 Dispersion: Variance and Standard Deviation

Variance, and its square root, the standard deviation, measure how “wide” or “spread out” a data distribution is. We begin with the formula definitions, which are slightly different for populations and samples.

1. Population Formulae :

Variance :

(3.3) \[\sigma^{2} = \frac{\sum_{i=1}^{N} (x_{i} - \mu)^{2}}{N}\]

where $N$ is the size of the population, $\mu$ is the mean of the population and $x_{i}$ is an individual value from the population.

Standard Deviation :

\[\sigma = \sqrt{\sigma^{2}}\]

The standard deviation, $\sigma$, is a population parameter. We will learn how to make inferences about population parameters using statistics from samples.
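A minimal Python sketch of Equation (3.3) and the population standard deviation, assuming the entire population is available as a list of numbers. The function names `population_variance` and `population_sd` are our own hypothetical choices; the standard library's `statistics.pvariance` computes the same quantity and is used here only as a cross-check.

```python
import math
import statistics


def population_variance(data):
    """Equation (3.3): average squared deviation from the population mean mu."""
    N = len(data)
    mu = sum(data) / N
    return sum((x - mu) ** 2 for x in data) / N


def population_sd(data):
    """Population standard deviation: square root of the population variance."""
    return math.sqrt(population_variance(data))


population = [10, 60, 50, 30, 40, 20]  # treated as a whole population
print(round(population_variance(population), 2))  # 291.67
print(math.isclose(population_variance(population), statistics.pvariance(population)))  # True
```

Note that the divisor is $N$, the full population size; the sample formula below changes exactly this one detail.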

2. Sample Formulae :

Variance :

(3.4) \[s^{2} = \frac{\sum_{i=1}^{n} (x_{i} - \bar{x})^{2}}{n-1}\]

where $n$ = sample size (number of data points), $n-1$ = degrees of freedom for the given sample, $\bar{x} = \frac{\sum x_{i}}{n}$ is the sample mean and $x_{i}$ is a data value.

Standard Deviation :

\[s = \sqrt{s^{2}}\]
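A sketch of Equation (3.4) in Python, assuming the sample is a list of at least two numbers. The names `sample_variance` and `sample_sd` are hypothetical, and the data set is made up for illustration; `statistics.variance` (which also divides by $n-1$) serves as a cross-check.

```python
import math
import statistics


def sample_variance(data):
    """Equation (3.4): squared deviations from the sample mean, divided by n - 1."""
    n = len(data)
    if n < 2:
        # With one point there is no spread to measure (0 degrees of freedom).
        raise ValueError("sample variance needs at least two data points")
    xbar = sum(data) / n
    return sum((x - xbar) ** 2 for x in data) / (n - 1)


def sample_sd(data):
    """Sample standard deviation s: square root of the sample variance."""
    return math.sqrt(sample_variance(data))


sample = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data for illustration
print(sample_variance(sample))     # 4.571428571428571 (= 32/7)
print(math.isclose(sample_variance(sample), statistics.variance(sample)))  # True
```

For these eight values the squared deviations sum to 32, so dividing by $n-1 = 7$ gives a slightly larger result than dividing by $n = 8$ would.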

Equations (3.3) and (3.4) are the definitions of variance as the second moment about the mean; you need to determine the mean ($\mu$ or $\bar{x}$) before you can compute variance with those formulae. They are algebraically equivalent to a “short cut” formula that allows you to compute the variance directly from the sum and the sum of squares of the data, without computing the mean first. For the sample variance (the useful one), the short cut formula is

(3.5) \[s^{2} = \frac{\sum x_{i}^{2} - \frac{\left(\sum x_{i}\right)^{2}}{n}}{n-1}\]
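The equivalence of Equations (3.4) and (3.5) is easy to check numerically. In this sketch (function names are our own), the short-cut version only ever needs $n$, $\sum x_{i}$ and $\sum x_{i}^{2}$, while the definitional version must compute $\bar{x}$ first. The test values are reused from the examples in this section purely as convenient inputs.

```python
import math


def shortcut_sample_variance(data):
    """Equation (3.5): uses only n, sum(x) and sum(x**2); no mean needed."""
    n = len(data)
    sum_x = sum(data)
    sum_x2 = sum(x * x for x in data)
    return (sum_x2 - sum_x ** 2 / n) / (n - 1)


def definitional_sample_variance(data):
    """Equation (3.4): needs the sample mean before summing squared deviations."""
    n = len(data)
    xbar = sum(data) / n
    return sum((x - xbar) ** 2 for x in data) / (n - 1)


sample = [11.2, 11.9, 12.0, 12.8, 13.4, 14.3]
print(math.isclose(shortcut_sample_variance(sample), definitional_sample_variance(sample)))  # True
```

One design caveat: on a computer the short cut subtracts two large, nearly equal numbers, which can lose floating-point precision when the data values are large compared with their spread. For hand and calculator work it is convenient; for software the definitional (two-pass) form is generally the safer choice.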

At this point you should figure out how to compute $\bar{x}$, $s$ and $\sigma$ on your calculator for a given set of data.

Fact (not proved here) : The sample variance $s^{2}$ is an unbiased estimate of the population variance $\sigma^{2}$, and its square root, the sample standard deviation $s$, is the statistic we use to estimate the population parameter $\sigma$. (Strictly, taking the square root introduces a small bias, so it is $s^{2}$ rather than $s$ that is exactly unbiased.) It is the $n-1$ in the denominator that makes $s^{2}$ unbiased. We won’t prove that here, but we will try to build up a little intuition about why it should be so, that is, why dividing by $n-1$ should be better than dividing by $n$. ($n-1$ is known as the degrees of freedom of the estimator $s$.) First notice that you can’t guess or estimate a value for $\sigma$ (i.e. compute $s$) with only one data point. There is no spread of values in a data set of one point! This is part of the reason why the degrees of freedom is $n-1$ and not $n$. A more direct reason is that you need to remove one piece of information (the mean) from your sample before you can guess $\sigma$ (compute $s$).
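One way to build that intuition is brute force: take a small population, list every possible sample of size $n$ drawn with replacement, and average the resulting sample variances. The sketch below assumes sampling with replacement (so the draws are independent), borrows the six values of Example 3.9 below purely as a convenient population, and picks $n = 2$ arbitrarily. Because it uses exact fractions, the comparison is not approximate.

```python
from fractions import Fraction
from itertools import product

population = [Fraction(v) for v in (10, 60, 50, 30, 40, 20)]  # exact arithmetic
N = len(population)
mu = sum(population) / N
sigma2 = sum((x - mu) ** 2 for x in population) / N  # Equation (3.3)


def spread(sample, divisor):
    """Sum of squared deviations from the sample mean, divided by `divisor`."""
    xbar = sum(sample) / len(sample)
    return sum((x - xbar) ** 2 for x in sample) / divisor


n = 2
samples = list(product(population, repeat=n))  # all N**n ordered samples
avg_n_minus_1 = sum(spread(s, n - 1) for s in samples) / len(samples)
avg_n = sum(spread(s, n) for s in samples) / len(samples)

print(sigma2)         # 875/3
print(avg_n_minus_1)  # 875/3, exactly the population variance
print(avg_n)          # 875/6, too small by the factor (n - 1)/n
```

Dividing by $n$ systematically underestimates $\sigma^{2}$ because the deviations are measured from $\bar{x}$, which is always pulled toward the sample itself; dividing by $n-1$ exactly compensates on average.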

Coefficient of Variation

The coefficient of variation, CVar, is a “normalized” measure of data spread. It will not be useful for any of the inferential statistics that we will be doing; it is a purely descriptive statistic. It can be useful as a dependent variable in a study, but here we treat it simply as a descriptive statistic that combines the mean and standard deviation. The definition is :

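\[\text{CVar} = \frac{s}{\bar{x}} \times 100\% \quad \text{(sample)}\]

\[\text{CVar} = \frac{\sigma}{\mu} \times 100\% \quad \text{(population)}\]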
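A small Python sketch of the definition, assuming the data are in a list; `cvar_percent` and its `population` flag are hypothetical names of our own. It relies on the standard library's `statistics.fmean`, `statistics.stdev` (divisor $n-1$) and `statistics.pstdev` (divisor $N$).

```python
import statistics


def cvar_percent(data, population=False):
    """Coefficient of variation in percent: s / x-bar * 100, or sigma / mu * 100."""
    mean = statistics.fmean(data)
    if mean == 0:
        raise ValueError("CVar is undefined when the mean is zero")
    sd = statistics.pstdev(data) if population else statistics.stdev(data)
    return sd / mean * 100


print(round(cvar_percent([10, 60, 50, 30, 40, 20], population=True), 1))  # 48.8
```

Dividing by the mean is what makes CVar unit-free, so it can compare the spread of data sets measured on different scales; it is only meaningful when the mean is not zero.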

Example 3.9 : In this example we take the data given in the following table as representing the whole population of size $N = 6$. So we use the formula of Equation (3.3), which requires us to sum $(x_{i} - \mu)^{2}$.

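| $x_{i}$ | $(x_{i} - \mu)^{2}$ |
|---|---|
| 10 | 625 |
| 60 | 625 |
| 50 | 225 |
| 30 | 25 |
| 40 | 25 |
| 20 | 225 |
| $\sum x_{i} = 210$ | $\sum (x_{i} - \mu)^{2} = 1750$ |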

Using the sum in the first column we compute the mean :

\[\mu = \frac{\sum x_{i}}{N} = \frac{210}{6} = 35\]

Then, with that mean, we compute the quantities in the second (calculation) column above and sum them. We can then compute the variance :

\[\sigma^{2} = \frac{\sum (x_{i} - \mu)^{2}}{N} = \frac{1750}{6} = 291.67\]

and standard deviation

\[\sigma = \sqrt{291.67} = 17.08\]

Finally, because we can, we compute the coefficient of variation:

\[\text{CVar} = \frac{\sigma}{\mu} \times 100\% = \frac{17.08}{35} \times 100\% = 48.8\%\]

◻
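The arithmetic of Example 3.9 can be checked with a few lines of Python. This sketch simply mirrors the table: it builds the calculation column $(x_{i} - \mu)^{2}$, sums it, and applies Equation (3.3); the variable names are our own.

```python
import math

data = [10, 60, 50, 30, 40, 20]                # Example 3.9, the whole population
N = len(data)
mu = sum(data) / N                             # 210 / 6
calc_column = [(x - mu) ** 2 for x in data]    # second (calculation) column
variance = sum(calc_column) / N                # Equation (3.3)
sd = math.sqrt(variance)
cvar = sd / mu * 100                           # coefficient of variation, percent

for x, sq in zip(data, calc_column):
    print(f"{x:>4} {sq:>8.0f}")
print(f"mu = {mu}, sigma^2 = {variance:.2f}, sigma = {sd:.2f}, CVar = {cvar:.1f}%")
```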

Example 3.10 : In this example, we have a sample. This is the usual circumstance under which we would compute variance and sample standard deviation. We can use either Equation (3.4) or (3.5). Using Equation (3.4) follows the same procedure as in Example 3.9, and we’ll leave that as an exercise. Below we’ll apply the short-cut formula and see how $s$ may be computed without knowing $\bar{x}$. The dataset is given in the table below in the column to the left of the double line. The columns to the right of the double line are, as usual, our calculation columns. The size of the sample is $n = 6$.

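| $x_{i}$ | $(x_{i} - \bar{x})^{2}$ | $x_{i}^{2}$ |
|---|---|---|
| 11.2 | | 125.44 |
| 11.9 | | 141.61 |
| 12.0 | exercise | 144.00 |
| 12.8 | | 163.84 |
| 13.4 | | 179.56 |
| 14.3 | | 204.49 |
| $\sum x_{i} = 75.6$ | | $\sum x_{i}^{2} = 958.94$ |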

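To find $s$, compute

\[s^{2} = \frac{\sum x_{i}^{2} - \frac{\left(\sum x_{i}\right)^{2}}{n}}{n-1} = \frac{958.94 - \frac{(75.6)^{2}}{6}}{5} = \frac{958.94 - 952.56}{5} = \frac{6.38}{5} = 1.276\]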

So

\[s = \sqrt{1.276} = 1.13\]

Note that $s^{2}$ is never negative! If it were, you couldn’t take the square root to find $s$. Also note that we have not yet determined the mean. We can do that now:

\[\bar{x} = \frac{\sum x_{i}}{n} = \frac{75.6}{6} = 12.6\]

And with the mean we can then compute

\[\text{CVar} = \frac{s}{\bar{x}} \times 100\% = \frac{1.13}{12.6} \times 100\% = 8.97\%\]

◻
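A Python sketch of the short-cut route taken in Example 3.10, using the six sample values from the table. As in the text, only the two sums are needed to get $s$; the mean is computed afterwards. Variable names are our own.

```python
import math

data = [11.2, 11.9, 12.0, 12.8, 13.4, 14.3]   # Example 3.10 sample
n = len(data)
sum_x = sum(data)                           # first column total
sum_x2 = sum(x ** 2 for x in data)          # x_i^2 column total

s2 = (sum_x2 - sum_x ** 2 / n) / (n - 1)    # Equation (3.5): no mean needed
s = math.sqrt(s2)
xbar = sum_x / n                            # mean computed only afterwards
cvar = s / xbar * 100                       # coefficient of variation, percent

print(f"sum x = {sum_x:.1f}, sum x^2 = {sum_x2:.2f}")
print(f"s^2 = {s2:.3f}, s = {s:.2f}, x-bar = {xbar:.1f}, CVar = {cvar:.2f}%")
```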

Grouped Sample Formula for Variance

As with the mean, we can compute an approximation of the data variance from frequency table (histogram) data. And again, this computation is exact for probability distributions with class widths of one. The grouped sample formula for variance is

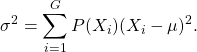

(3.6) ![]()

where $G$ is the number of groups or classes, $x_{i}$ is the class center of group $i$, $f_{i}$ is the frequency of group $i$ and

\[n = \sum_{i=1}^{G} f_{i}\]

is the sample size. Equation (3.6) is the short-cut version of the formula. We can also write

\[s^{2} = \frac{\sum_{i=1}^{G} f_{i} (x_{i} - \bar{x})^{2}}{n-1}\]

or, if we are dealing with a population and the class width is one so that each class center $x_{i}$ is itself a data value,

\[\sigma^{2} = \frac{\sum_{i=1}^{G} f_{i} (x_{i} - \mu)^{2}}{N}\]

which will be useful when we talk about probability distributions. In fact, let’s look ahead a bit and make the frequentist definition for the probability for ![]() as

as ![]() (which is the relative frequency of class

(which is the relative frequency of class ![]() ) so that

) so that

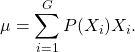

(3.7)

If we make the same substitution $P(x_{i}) = \frac{f_{i}}{N}$ in the grouped mean formula, Equation (3.1), with population items $\mu$ and $N$ in place of the sample items $\bar{x}$ and $n$, then it becomes

(3.8) \[\mu = \sum_{i=1}^{G} x_{i} P(x_{i})\]
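To see the probability forms (3.7) and (3.8) in action, the sketch below uses a small, made-up frequency table with class width one (the values and counts are hypothetical, chosen only for illustration). It converts frequencies into relative frequencies $P(x_{i}) = f_{i}/N$ and checks the results against the ordinary population formulas applied to the expanded raw data.

```python
import math
import statistics

# Hypothetical frequency table with class width one: value x_i -> frequency f_i
freq = {0: 2, 1: 5, 2: 8, 3: 4, 4: 1}
N = sum(freq.values())                                  # population size

P = {x: f / N for x, f in freq.items()}                 # P(x_i) = f_i / N
mu = sum(x * p for x, p in P.items())                   # Equation (3.8)
sigma2 = sum((x - mu) ** 2 * p for x, p in P.items())   # Equation (3.7)

# Cross-check: expand the table back into raw values and use the population formulas.
raw = [x for x, f in freq.items() for _ in range(f)]
assert math.isclose(mu, statistics.fmean(raw))
assert math.isclose(sigma2, statistics.pvariance(raw))
print(f"mu = {mu:.2f}, sigma^2 = {sigma2:.4f}")
```

Because each class here contains a single value, nothing is lost by grouping, which is why the grouped formulas are exact in this case.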

More on probability distributions later; for now, let’s see how we use Equation (3.6) for frequency table data.

Example 3.11 : Given the frequency table data to the left of the double dividing line in the table below, compute the variance and standard deviation of the data using the grouped data formula.

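| Class | Class Boundaries | Freq, $f_{i}$ | Class Centre, $x_{i}$ | $f_{i} x_{i}$ | $x_{i}^{2}$ | $f_{i} x_{i}^{2}$ |
|---|---|---|---|---|---|---|
| 1 | 5.5 – 10.5 | 1 | 8 | 8 | 64 | 64 |
| 2 | 10.5 – 15.5 | 2 | 13 | 26 | 169 | 338 |
| 3 | 15.5 – 20.5 | 3 | 18 | 54 | 324 | 972 |
| 4 | 20.5 – 25.5 | 5 | 23 | 115 | 529 | 2645 |
| 5 | 25.5 – 30.5 | 4 | 28 | 112 | 784 | 3136 |
| 6 | 30.5 – 35.5 | 3 | 33 | 99 | 1089 | 3267 |
| 7 | 35.5 – 40.5 | 2 | 38 | 76 | 1444 | 2888 |
| Sum | | $n = 20$ | | 490 | | 13310 |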

The formula

\[s^{2} = \frac{\sum f_{i} x_{i}^{2} - \frac{\left(\sum f_{i} x_{i}\right)^{2}}{n}}{n-1}\]

tells us that we need the sums of $f_{i} x_{i}$ and $f_{i} x_{i}^{2}$ after we compute the class centres $x_{i}$ and their squares $x_{i}^{2}$; these calculations we do in the columns added to the right of the double bar in the table above. With the sums we compute

\[s^{2} = \frac{13310 - \frac{(490)^{2}}{20}}{19} = \frac{13310 - 12005}{19} = \frac{1305}{19} = 68.68\]

So

\[s = \sqrt{68.68} = 8.29\]

The mean, from one of the sums already computed, is

\[\bar{x} = \frac{\sum f_{i} x_{i}}{n} = \frac{490}{20} = 24.5\]

and the coefficient of variation is

\[\text{CVar} = \frac{s}{\bar{x}} \times 100\% = \frac{8.29}{24.5} \times 100\% = 33.8\%\]

◻
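The grouped calculation of Example 3.11 can be sketched in Python from the class boundaries and frequencies alone. Class centres are taken as the midpoints of the boundaries, as in the table; the list layout and variable names are our own.

```python
import math

# Example 3.11: (lower boundary, upper boundary, frequency) for each class
classes = [(5.5, 10.5, 1), (10.5, 15.5, 2), (15.5, 20.5, 3), (20.5, 25.5, 5),
           (25.5, 30.5, 4), (30.5, 35.5, 3), (35.5, 40.5, 2)]

centres = [(lo + hi) / 2 for lo, hi, _ in classes]   # class centres x_i
freqs = [f for _, _, f in classes]                   # frequencies f_i

n = sum(freqs)
sum_fx = sum(f * x for f, x in zip(freqs, centres))         # sum of f_i x_i
sum_fx2 = sum(f * x ** 2 for f, x in zip(freqs, centres))   # sum of f_i x_i^2

s2 = (sum_fx2 - sum_fx ** 2 / n) / (n - 1)   # Equation (3.6)
s = math.sqrt(s2)
xbar = sum_fx / n                            # grouped mean
cvar = s / xbar * 100                        # coefficient of variation, percent
print(f"n = {n}, s^2 = {s2:.2f}, s = {s:.2f}, x-bar = {xbar}, CVar = {cvar:.1f}%")
```

Keep in mind that this treats every value in a class as if it sat at the class centre, so for real (ungrouped) data the result is an approximation.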

Now is a good time to figure out how to compute $\bar{x}$ and $s$ (and $\sigma$) on your calculators.