14. Correlation and Regression

14.5 Linear Regression

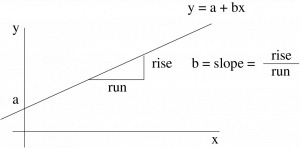

Linear regression finds the line that best fits the scatter plot data in the least squares sense. Let’s begin with the equation of a line:

$$y = a + bx$$

where $a$ is the intercept and $b$ is the slope.

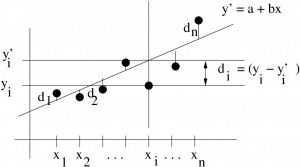

The data, the collection of $(x, y)$ points, rarely lie on a perfect straight line in a scatter plot. So we write

$$y' = a + bx$$

as the equation of the best fit line. The quantity $y'$ is the predicted value of $y$ (predicted from the value of $x$) and $y$ is the measured value of $y$. Now consider:

The difference between the measured and predicted value at data point $i$, $d_i = y_i - y'_i$, is the deviation. The quantity

$$d_i^2 = (y_i - y'_i)^2 = (y_i - (a + b x_i))^2$$

is the squared deviation. The sum of the squared deviations is

$$E = \sum_{i=1}^{n} d_i^2 = \sum_{i=1}^{n} (y_i - (a + b x_i))^2$$
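To make the sum of squared deviations concrete, here is a minimal Python sketch. The function name `sum_squared_deviations` and the four data points are made up for illustration and do not come from the text; the point is only that different choices of intercept and slope give different values of the sum, and least squares looks for the smallest one.

```python
def sum_squared_deviations(a, b, xs, ys):
    """Sum over all points of (y_i - (a + b*x_i))**2 for the line y' = a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Made-up illustrative data (not from the text).
xs = [1, 2, 3, 4]
ys = [2, 4, 5, 8]

# Two candidate lines: y' = 0 + 2x and y' = 1 + 1x.
E_line1 = sum_squared_deviations(0.0, 2.0, xs, ys)
E_line2 = sum_squared_deviations(1.0, 1.0, xs, ys)

print(E_line1)  # 1.0
print(E_line2)  # 11.0
```

The first candidate line misses only one point, by 1 unit, so its sum of squares is much smaller than that of the second line.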

The least squares solution for $a$ and $b$ is the solution that minimizes $E$, the sum of squares, over all possible selections of $a$ and $b$. Minimization problems like this are handled with differential calculus: set the partial derivatives of $E$ with respect to $a$ and $b$ equal to zero and solve the resulting pair of equations:

$$\frac{\partial E}{\partial a} = 0 \quad \text{and} \quad \frac{\partial E}{\partial b} = 0$$

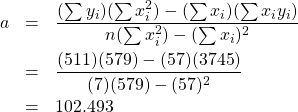

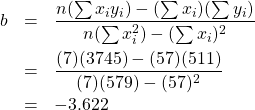
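As a sketch of the calculus step, which the text does not spell out, write the sum of squared deviations as $E = \sum (y_i - (a + b x_i))^2$ (notation assumed here from its definition). Differentiating with respect to $a$ and $b$ and setting each result to zero gives:

$$\begin{aligned}
\frac{\partial E}{\partial a} &= -2 \sum (y_i - a - b x_i) = 0 \\
\frac{\partial E}{\partial b} &= -2 \sum x_i (y_i - a - b x_i) = 0
\end{aligned}$$

Dividing by $-2$ and rearranging gives two linear equations in $a$ and $b$, with all sums running over the $n$ data points:

$$\begin{aligned}
n a + b \sum x &= \sum y \\
a \sum x + b \sum x^2 &= \sum xy
\end{aligned}$$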

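The solution to those two equations is

$$a = \frac{(\sum y)(\sum x^2) - (\sum x)(\sum xy)}{n(\sum x^2) - (\sum x)^2}$$

and

$$b = \frac{n(\sum xy) - (\sum x)(\sum y)}{n(\sum x^2) - (\sum x)^2}$$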
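The closed-form solution for the intercept and slope translates directly into code. The following is a minimal sketch, not a library routine: the function name `least_squares_line` and the test points are hypothetical, chosen so that the points lie exactly on the line $y = 1 + 2x$ and the answer is easy to check by eye.

```python
def least_squares_line(xs, ys):
    """Return (a, b), the intercept and slope of the best fit line y' = a + b*x."""
    n = len(xs)
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)

    # Common denominator of both formulas: n*sum(x^2) - (sum(x))^2.
    denom = n * sum_x2 - sum_x ** 2
    if denom == 0:
        raise ValueError("all x values are equal; the slope is undefined")

    a = (sum_y * sum_x2 - sum_x * sum_xy) / denom
    b = (n * sum_xy - sum_x * sum_y) / denom
    return a, b


# Illustrative points that lie exactly on y = 1 + 2x (not from the text).
a, b = least_squares_line([0, 1, 2, 3], [1, 3, 5, 7])
print(a, b)  # 1.0 2.0
```

The guard on the denominator covers the one case where the formulas break down: if every $x$ value is the same, the data form a vertical column and no unique slope exists.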

Example 14.3: Continue with the data from Example 14.1 and find the best fit line. The data again are:

| Subject | $x$ | $y$ | $xy$ | $x^2$ | $y^2$ |
|---|---|---|---|---|---|
| A | 6 | 82 | 492 | 36 | 6724 |
| B | 2 | 86 | 172 | 4 | 7396 |
| C | 15 | 43 | 645 | 225 | 1849 |
| D | 9 | 74 | 666 | 81 | 5476 |
| E | 12 | 58 | 696 | 144 | 3364 |
| F | 5 | 90 | 450 | 25 | 8100 |
| G | 8 | 78 | 624 | 64 | 6084 |

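Using the sums of the columns ($n = 7$, $\sum x = 57$, $\sum y = 511$, $\sum xy = 3745$, $\sum x^2 = 579$), compute:

$$a = \frac{(\sum y)(\sum x^2) - (\sum x)(\sum xy)}{n(\sum x^2) - (\sum x)^2} = \frac{(511)(579) - (57)(3745)}{7(579) - (57)^2} = \frac{82404}{804} \approx 102.493$$

and

$$b = \frac{n(\sum xy) - (\sum x)(\sum y)}{n(\sum x^2) - (\sum x)^2} = \frac{7(3745) - (57)(511)}{7(579) - (57)^2} = \frac{-2912}{804} \approx -3.622$$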

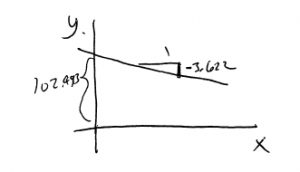

So

$$y' = 102.493 - 3.622x$$

▢
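The arithmetic in this example can be checked with a short Python sketch. It takes the second and third columns of the table as $x$ and $y$ (the remaining columns are their product and squares), recomputes the column sums, and applies the least squares formulas for the intercept and slope. The variable names are illustrative choices.

```python
# Data from the table: subjects A through G.
x = [6, 2, 15, 9, 12, 5, 8]
y = [82, 86, 43, 74, 58, 90, 78]

n = len(x)
sum_x = sum(x)
sum_y = sum(y)
sum_xy = sum(xi * yi for xi, yi in zip(x, y))
sum_x2 = sum(xi ** 2 for xi in x)

# Shared denominator: n*sum(x^2) - (sum(x))^2.
denom = n * sum_x2 - sum_x ** 2

a = (sum_y * sum_x2 - sum_x * sum_xy) / denom  # intercept
b = (n * sum_xy - sum_x * sum_y) / denom       # slope

print(sum_x, sum_y, sum_xy, sum_x2)  # 57 511 3745 579
print(round(a, 3), round(b, 3))      # 102.493 -3.622
```

The negative slope matches the downward trend in the table: subjects with larger $x$ values tend to have smaller $y$ values.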

14.5.1: Relationship between correlation and slope

The relationship is

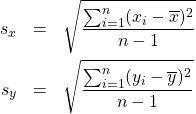

$$r = b \, \frac{s_x}{s_y}$$

where $s_x$ and $s_y$ are the standard deviations of the $x$ and $y$ datasets considered separately.
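The relationship $r = b \, s_x / s_y$ between the correlation coefficient and the slope can be checked numerically. The sketch below uses the data from Example 14.3 and assumes the usual computational formula for the Pearson correlation coefficient; it computes $r$ directly and again from the slope and the two sample standard deviations, using `statistics.stdev` from the Python standard library.

```python
import math
import statistics

# Data from Example 14.3.
x = [6, 2, 15, 9, 12, 5, 8]
y = [82, 86, 43, 74, 58, 90, 78]

n = len(x)
sum_x, sum_y = sum(x), sum(y)
sum_xy = sum(xi * yi for xi, yi in zip(x, y))
sum_x2 = sum(xi ** 2 for xi in x)
sum_y2 = sum(yi ** 2 for yi in y)

# Least squares slope.
b = (n * sum_xy - sum_x * sum_y) / (n * sum_x2 - sum_x ** 2)

# Pearson correlation coefficient from the computational formula.
r_direct = (n * sum_xy - sum_x * sum_y) / math.sqrt(
    (n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2)
)

# The same coefficient recovered from the slope and the standard deviations.
s_x = statistics.stdev(x)
s_y = statistics.stdev(y)
r_from_slope = b * s_x / s_y

print(round(r_direct, 3), round(r_from_slope, 3))  # -0.944 -0.944
```

Because only the ratio $s_x / s_y$ enters the relationship, it holds whether the standard deviations are computed with $n - 1$ or $n$ in the denominator, as long as both use the same convention.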