12. ANOVA

12.2 Post hoc Comparisons

If $H_0$ is rejected in a one-way ANOVA, you will frequently want to know where the differences in the means are. For example, if we tested $H_0: \mu_1 = \mu_2 = \cdots = \mu_k$ and rejected $H_0$ in a one-way ANOVA, then we will want to know if $\mu_1 \neq \mu_2$ or $\mu_2 \neq \mu_3$, etc.

To see which means are different after doing an ANOVA we could just compare all possible pairs using $t$-tests. But such an approach is no good because the assumed type I error rates, $\alpha$, associated with the $t$-tests would be wrong. The overall $\alpha$ rate would be higher because in making such multiple comparisons you incur a greater chance of making an error.
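As a rough illustration of how quickly the overall error rate grows, the sketch below computes the chance of at least one type I error across all pairwise comparisons. It assumes the comparisons are independent, which pairwise $t$-tests on shared groups are not, so the numbers are only a guide. The function name `familywise_error_rate` is made up for this example.

```python
from math import comb


def familywise_error_rate(alpha: float, m: int) -> float:
    """Chance of at least one type I error across m tests at level alpha.

    Assumes the m tests are independent, which pairwise t-tests on
    shared groups are not, so treat the result as a rough guide.
    """
    return 1.0 - (1.0 - alpha) ** m


alpha = 0.05
for k in (3, 4, 5):          # number of groups
    m = comb(k, 2)           # number of pairwise comparisons
    rate = familywise_error_rate(alpha, m)
    print(f"k={k}: {m} comparisons, overall error rate about {rate:.3f}")
```

Under this simplification, three groups (three comparisons) already give an overall rate of about 0.14 instead of the nominal 0.05.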

So we need to correct our test statistic and/or the corresponding $p$-value when we do such multiple comparisons. We will cover two such multiple comparison approaches in detail:

- Scheffé test

- Tukey test

and we will look at the Bonferroni approach.

Doing multiple comparisons after an ANOVA is known as post hoc testing. It is the traditional approach for comparing several means. The opening “omnibus” ANOVA lets you know if there are any differences at all. If you fail to reject the ANOVA $H_0$ then you are done. Only when you reject $H_0$ do you put in the effort of comparing means pairwise. This traditional approach, designed to minimize the necessary calculations, is not the only way to compare multiple means. The other approach is to forget about the ANOVA and use $t$-tests to compare means pairwise or in combinations[1] of means until you use up the available degrees of freedom in the dataset. Here we will stick with the traditional approach.

12.2.1 Scheffé test

The test statistic for the Scheffé test is

![Rendered by QuickLaTeX.com \[ F_{s,{\rm test}} = F_{s} = \frac{(\bar{x}_{i} - \bar{x}_{j})^{2}}{s_{\rm W}^{2} \left( \frac{1}{n_{i}} + \frac{1}{n_{j}} \right)} \]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-d977b18b64d1dfcd46a9a96a8387746b_l3.png)

Note that $F_{s}$ is basically a $t^{2}$ quantity (recall that $F = t^{2}$ when the numerator has one degree of freedom) but with the pooled estimate of the common population variance $\sigma^{2}$ given by the value of $s_{\rm W}^{2}$ from the ANOVA. In other words, $F_{s}$ uses information from all of the data to estimate $\sigma^{2}$ instead of from just groups $i$ and $j$ as a $t$-test would (see Equation 10.5). Note that the Scheffé test does not require equal group sizes $n_{i}$.
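To make the $t^{2}$ connection concrete, here is a short sketch. It assumes that Equation 10.5 is the usual pooled-variance $t$ statistic for two independent groups; the symbol $s_{p}^{2}$ for the two-group pooled variance is an assumption of this sketch.

\[ t = \frac{\bar{x}_{i} - \bar{x}_{j}}{\sqrt{s_{p}^{2} \left( \frac{1}{n_{i}} + \frac{1}{n_{j}} \right)}} \quad \Rightarrow \quad t^{2} = \frac{(\bar{x}_{i} - \bar{x}_{j})^{2}}{s_{p}^{2} \left( \frac{1}{n_{i}} + \frac{1}{n_{j}} \right)}, \qquad s_{p}^{2} = \frac{(n_{i} - 1) s_{i}^{2} + (n_{j} - 1) s_{j}^{2}}{n_{i} + n_{j} - 2} \]

Replacing the two-group estimate $s_{p}^{2}$ with the all-groups estimate $s_{\rm W}^{2}$ turns $t^{2}$ into the Scheffé statistic $F_{s}$.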

The critical statistic, a modification of the critical statistic from the ANOVA, is

\[ F_{s,{\rm crit}} = (k - 1) \, F_{\rm crit} \]

where $F_{\rm crit}$ is the ANOVA critical value and $\nu_{1} = k - 1$ and $\nu_{2} = N - k$ are the ANOVA degrees of freedom. The critical statistic is the same for all pairwise comparisons regardless of the sample sizes, $n_{i}$ and $n_{j}$, of the pair of groups being compared.

Example 12.2 : The ANOVA of Example 12.1 found that at least one of the three means was different from the others. Use the Scheffé test to find the significant differences between the means. There has to be at least one.

Solution :

0. Data reduction.

Collect the necessary information from the omnibus ANOVA. We’ll need:

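\[ \bar{x}_{1} = 15.5, \quad \bar{x}_{2} = 4.0, \quad \bar{x}_{3} = 5.83, \quad s_{\rm W}^{2} = 45.5 \]

\[ n_{1} = n_{2} = n_{3} = 6, \quad k = 3, \quad N = 18 \]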

1. Hypotheses.

There are 3 hypotheses pairs to test :

![]()

![]()

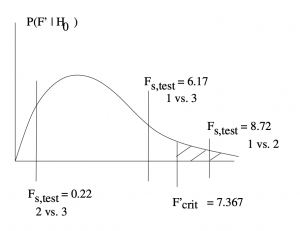

2. Critical statistic.

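One value for all three hypothesis tests, using the ANOVA critical value $F_{\rm crit} = 3.68$ for $\alpha = 0.05$, $\nu_{1} = 2$ and $\nu_{2} = 15$:

\[ F_{s,{\rm crit}} = (k - 1) \, F_{\rm crit} = (2)(3.68) = 7.36 \]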

3. Test statistic.

There are three of them:

$\bar{x}_{1}$ vs. $\bar{x}_{2}$:

![Rendered by QuickLaTeX.com \[F_{s} = \frac{(\bar{x}_{1} - \bar{x}_{2})^{2}}{s_{\rm W}^{2} \left( \frac{1}{n_{1}} + \frac{1}{n_{2}} \right)}=\frac{(15.5 - 4.0)^{2}}{45.5 \left( \frac{1}{6} + \frac{1}{6} \right)} = 8.72\]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-024f51ad4d1e882d5918ca5eeb297f1c_l3.png)

$\bar{x}_{1}$ vs. $\bar{x}_{3}$:

![Rendered by QuickLaTeX.com \[F_{s} = \frac{(\bar{x}_{1} - \bar{x}_{3})^{2}}{s_{\rm W}^{2} \left( \frac{1}{n_{1}} + \frac{1}{n_{3}} \right)}=\frac{(15.5 - 5.83)^{2}}{45.5 \left( \frac{1}{6} + \frac{1}{6} \right)} = 6.17\]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-1b377e32fdbbff5ab2003b08efb6a84a_l3.png)

$\bar{x}_{2}$ vs. $\bar{x}_{3}$:

![Rendered by QuickLaTeX.com \[F_{s} = \frac{(\bar{x}_{2} - \bar{x}_{3})^{2}}{s_{\rm W}^{2} \left( \frac{1}{n_{2}} + \frac{1}{n_{3}} \right)} =\frac{(4.0 - 5.83)^{2}}{45.5 \left( \frac{1}{6} + \frac{1}{6} \right)} = 0.22\]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-adc9cf1fb66e1d6f2258348c9a7799b9_l3.png)

4. Decision.

For $\bar{x}_{1}$ vs. $\bar{x}_{2}$, reject $H_{0}$. For $\bar{x}_{1}$ vs. $\bar{x}_{3}$, do not reject $H_{0}$. For $\bar{x}_{2}$ vs. $\bar{x}_{3}$, do not reject $H_{0}$.

5. Interpretation.

The results of the Scheffé test at $\alpha = 0.05$ conclude that only the mean numbers of interchange employees between toll roads 1 and 2 are significantly different.

▢
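The Scheffé calculation in Example 12.2 can be sketched in a few lines of Python. The group means, $s_{\rm W}^{2}$ and sample sizes are the ones used in the test statistics above. The ANOVA critical value 3.68 is an assumed table lookup ($\alpha = 0.05$, $\nu_{1} = 2$, $\nu_{2} = 15$), and the names `scheffe_statistic` and `scheffe_results` are made up for this illustration.

```python
from itertools import combinations

# Summary values from the omnibus ANOVA of Example 12.1
means = {1: 15.5, 2: 4.0, 3: 5.83}   # group means
sizes = {1: 6, 2: 6, 3: 6}           # group sample sizes
s_w2 = 45.5                          # within-group variance estimate
k = len(means)                       # number of groups

# Assumed table value: F critical for alpha = 0.05, nu1 = 2, nu2 = 15
f_crit_anova = 3.68


def scheffe_statistic(xi, xj, ni, nj, s_w2):
    """Scheffe test statistic F_s for one pair of group means."""
    return (xi - xj) ** 2 / (s_w2 * (1.0 / ni + 1.0 / nj))


# One critical value serves every pairwise comparison
f_s_crit = (k - 1) * f_crit_anova

scheffe_results = {}
for i, j in combinations(sorted(means), 2):
    f_s = scheffe_statistic(means[i], means[j], sizes[i], sizes[j], s_w2)
    reject = f_s > f_s_crit
    scheffe_results[(i, j)] = (f_s, reject)
    decision = "reject H0" if reject else "do not reject H0"
    print(f"groups {i} vs {j}: F_s = {f_s:.2f}, critical = {f_s_crit:.2f}, {decision}")
```

Because `sizes` is kept per group, the same sketch works unchanged when the group sizes differ.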

12.2.2 Tukey Test

The test statistic for the Tukey test is

![Rendered by QuickLaTeX.com \[q = \frac{|\bar{x}_{i} - \bar{x}_{j}|}{\sqrt{s_{\rm W}^{2}/n}}\]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-97e4bd31070c411e75064a97cdbcbc6b_l3.png)

where, again, $s_{\rm W}^{2}$ is from the omnibus ANOVA, $\bar{x}_{i}$ is the mean of group $i$ and we must have equal sample sizes for all groups: $n_{i} = n$ for all $i$. There is a Tukey test statistic for unequal $n$, and it is used by SPSS, but we won’t cover that here.

The critical statistic, ![]() , comes from a table of critical values from a new distribution called the

, comes from a table of critical values from a new distribution called the ![]() distribution. The critical values are tabulated in the Tukey Test Critical Values Table. To use this table, you need two numbers going in :

distribution. The critical values are tabulated in the Tukey Test Critical Values Table. To use this table, you need two numbers going in :

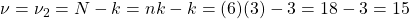

= number of groups

= number of groups = degrees of freedom for

= degrees of freedom for

Example 12.3 : Repeat Example 12.2 using the Tukey test instead of the Scheffé test.

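Solution :

0. Data reduction.

We use the same data from the omnibus ANOVA:

\[ \bar{x}_{1} = 15.5, \quad \bar{x}_{2} = 4.0, \quad \bar{x}_{3} = 5.83, \quad s_{\rm W}^{2} = 45.5 \]

\[ n = 6, \quad k = 3, \quad N = 18 \]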

1. Hypotheses.

The 3 hypotheses pairs to test are the same :

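\[ H_{0}: \mu_{1} = \mu_{2} \qquad H_{1}: \mu_{1} \neq \mu_{2} \]

\[ H_{0}: \mu_{1} = \mu_{3} \qquad H_{1}: \mu_{1} \neq \mu_{3} \]

\[ H_{0}: \mu_{2} = \mu_{3} \qquad H_{1}: \mu_{2} \neq \mu_{3} \]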

2. Critical statistic.

Use the Tukey Test Critical Values Table. Go into the table with

- Number of groups = $k = 3$

- Degrees of freedom for $s_{\rm W}^{2}$ = $\nu = N - k = 15$

and $\alpha = 0.05$ to find

\[ q_{\rm crit} = 3.67 \]

3. Test statistic.

Again, there are three of them :

$\bar{x}_{1}$ vs. $\bar{x}_{2}$:

![Rendered by QuickLaTeX.com \[ q = \frac{| \overline{x}_{1} - \overline{x}_{2} |}{\sqrt{s_{W}^{2}/n}} = \frac{| 15.5 - 4.0 |}{\sqrt{45.5/6}} = 4.17 \]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-c5cb4330bb772b2113f2f4efab54ed3b_l3.png)

$\bar{x}_{1}$ vs. $\bar{x}_{3}$:

![Rendered by QuickLaTeX.com \[ q = \frac{| \overline{x}_{1} - \overline{x}_{3} |}{\sqrt{s_{W}^{2}/n}} = \frac{| 15.5 - 5.83 |}{\sqrt{45.5/6}} = 3.51 \]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-a2e6ad4949d1d18f66c08dd02c97d95e_l3.png)

$\bar{x}_{2}$ vs. $\bar{x}_{3}$:

![Rendered by QuickLaTeX.com \[ q = \frac{| \overline{x}_{2} - \overline{x}_{3} |}{\sqrt{s_{W}^{2}/n}} = \frac{| 4.0 - 5.83 |}{\sqrt{45.5/6}} = 0.66 \]](https://openpress.usask.ca/app/uploads/quicklatex/quicklatex.com-0254a10c188511c3482bae9eefc70057_l3.png)

4. Decision.

Reject $H_{0}$ when $q > q_{\rm crit}$. This only happens for one hypothesis pair: For $\bar{x}_{1}$ vs. $\bar{x}_{2}$, reject $H_{0}$. For $\bar{x}_{1}$ vs. $\bar{x}_{3}$, do not reject $H_{0}$. For $\bar{x}_{2}$ vs. $\bar{x}_{3}$, do not reject $H_{0}$.

5. Interpretation.

The results of the Tukey test at $\alpha = 0.05$ conclude that only the mean numbers of interchange employees between toll roads 1 and 2 are significantly different. (Same result as the Scheffé test. Usually this happens, but when it doesn’t, you need to use some kind of non-mathematical judgement.)
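The Tukey calculation of Example 12.3 can be sketched the same way. The means, $s_{\rm W}^{2}$ and common group size are taken from the test statistics above. The critical value 3.67 is an assumed table lookup ($\alpha = 0.05$, 3 groups, 15 degrees of freedom), and the names `tukey_q` and `tukey_results` are made up for this illustration.

```python
from itertools import combinations
from math import sqrt

# Summary values from the omnibus ANOVA of Example 12.1
means = {1: 15.5, 2: 4.0, 3: 5.83}   # group means
n = 6                                # common group size (equal n required)
s_w2 = 45.5                          # within-group variance estimate

# Assumed table value: q critical for alpha = 0.05, k = 3, nu = 15
q_crit = 3.67


def tukey_q(xi, xj, s_w2, n):
    """Tukey test statistic q for one pair of group means (equal n)."""
    return abs(xi - xj) / sqrt(s_w2 / n)


tukey_results = {}
for i, j in combinations(sorted(means), 2):
    q = tukey_q(means[i], means[j], s_w2, n)
    reject = q > q_crit
    tukey_results[(i, j)] = (q, reject)
    decision = "reject H0" if reject else "do not reject H0"
    print(f"groups {i} vs {j}: q = {q:.2f}, critical = {q_crit:.2f}, {decision}")
```

Carried to more digits, the first statistic is about 4.176, so it prints as 4.18 rather than the 4.17 quoted above. The difference is only in the rounding and does not change the decision.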

12.2.3 Bonferroni correction

A more conservative (less powerful) approach to multiple comparisons (post hoc testing) is to use Bonferroni’s method. The fundamental idea of the Bonferroni correction is to add the probabilities of making individual type I errors to get an overall type I error rate.

Implementing the idea is simple. Do a bunch of $t$-tests and multiply the $p$-value by a correction factor $C$. There are a number of ways to choose $C$ (you will have to dig to find out which method SPSS uses). The easiest (and most conservative) is to set $C$ equal to the number of pairwise comparisons done. So if you have $k$ groups then $C$ is given by the binomial coefficient:

\[ C = \binom{k}{2} = \frac{k(k-1)}{2} \]
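A minimal sketch of the simplest choice, where the correction factor (called `c` here) is the number of pairwise comparisons. The raw $p$-values are hypothetical numbers chosen only for illustration, not results from any example in this chapter, and capping the adjusted value at 1 is an added convention so that the result is still a valid probability.

```python
from math import comb


def bonferroni_adjust(p_values, c):
    """Multiply each p-value by the correction factor c, capped at 1."""
    return [min(1.0, c * p) for p in p_values]


k = 3                 # number of groups
c = comb(k, 2)        # number of pairwise comparisons: k(k-1)/2

# Hypothetical raw p-values from three pairwise t-tests (illustration only)
raw_p = [0.010, 0.030, 0.600]
adjusted = bonferroni_adjust(raw_p, c)

alpha = 0.05
for p, p_adj in zip(raw_p, adjusted):
    decision = "reject H0" if p_adj < alpha else "do not reject H0"
    print(f"raw p = {p:.3f}, adjusted p = {p_adj:.3f}, {decision}")
```

Comparing the adjusted $p$-value with $\alpha$ is equivalent to comparing the raw $p$-value with $\alpha / c$.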

Another way is to look at the total degrees of freedom associated with the pairwise $t$-tests and compare it to the total degrees of freedom in the data (for which more than one choice can be argued) to come up with a value for the correction factor $C$.

Since there is some ambiguity as to what we should use for $C$, we will not do Bonferroni post hoc testing by hand. However, be able to recognize Bonferroni results in SPSS, treating the value of $C$ as an SPSS black-box parameter.

[1] Combinations of means may be compared using "contrasts". For example, one group mean might be compared with the average of two others. Contrasts are not covered in Psy 234.