17. Overview of the General Linear Model

17.1 Linear Algebra Basics

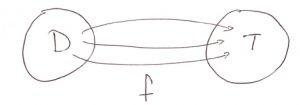

At its most abstract level, modern mathematics is based on set theory. Functions, $f$, are maps that take an element in a domain set, $X$, to an element in a target set, $Y$.

The range of $f$ is the set $f(X)$, the set of all possible values of $f$. Note that the range is a subset of the target; in set notation symbols, $f(X) \subset Y$, where $\subset$ means subset.
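The difference between a range and a target can be checked on a small finite example. This is only a sketch: the function `f`, the `domain` set and the `target` set are hypothetical choices made for illustration, not taken from the text.

```python
# Hypothetical function: f squares its input.
def f(x):
    return x * x

domain = {-2, -1, 0, 1, 2}      # assumed domain set
target = set(range(0, 10))      # assumed target set {0, 1, ..., 9}

# The range is the set of values that f actually takes on the domain.
range_of_f = {f(x) for x in domain}

print(range_of_f <= target)     # subset test: the range sits inside the target
print(range_of_f == target)     # the range need not fill the whole target
```

Here the range contains only 0, 1 and 4, so it is a proper subset of the assumed target.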

17.1.1 Vector Spaces

We specialize immediately to special sets called vector spaces and denote these sets by $\mathbb{R}^n$. Here $n$ is the dimension of the vector space. Some examples:

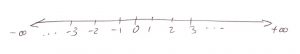

$\mathbb{R}^1 = \mathbb{R}$ = the set of real numbers = the number line:

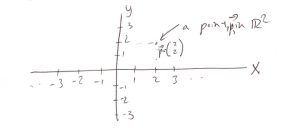

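$\mathbb{R}^2 = \left\{ \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \;\middle|\; x_1, x_2 \in \mathbb{R} \right\}$ = the set of all pairs of real numbers written as a column vector.

We have introduced some set symbol notation here. The basic notation for a set uses curly brackets with a dividing line:

$$\{\, \text{elements} \mid \text{condition the elements satisfy} \,\}$$

The dividing line $|$ is read as “such that”, and the set symbol $\in$ is read as “belongs to”, so you would read the set defining $\mathbb{R}^2$ above as: “the set of column vectors such that $x_1$ and $x_2$ belong to the set $\mathbb{R}$”.

The transpose of a column vector is an operation written as

$$\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}^T = \begin{bmatrix} x_1 & x_2 \end{bmatrix}$$

…which is known as a row vector. The transpose of a row vector is a column vector.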

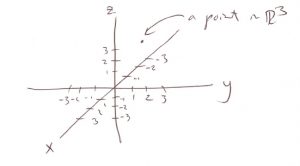

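Continuing with higher dimensions:

$$\mathbb{R}^3 = \left\{ \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} \;\middle|\; x_1, x_2, x_3 \in \mathbb{R} \right\}$$

In general we have $n$-dimensional space[1]:

$$\mathbb{R}^n = \left\{ \vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} \;\middle|\; x_1, x_2, \ldots, x_n \in \mathbb{R} \right\}$$

Notice that we are using the symbol $\vec{x}$ to abstractly represent a column vector.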

17.1.2 Linear Transformations or Linear Maps

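In general we can define maps, $L$, from $\mathbb{R}^n$ to $\mathbb{R}^m$:

$$L : \mathbb{R}^n \to \mathbb{R}^m$$

We will use the following abstract notation for a map: $L : \vec{x} \mapsto \vec{y}$, where $\vec{x} \in \mathbb{R}^n$ and $\vec{y} \in \mathbb{R}^m$; that is, $\vec{x}$ gets mapped to $\vec{y}$ by $L$ in this example.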

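A linear map or a linear transformation is a map that abstractly satisfies:

$$L(a\vec{x} + b\vec{y}) = aL(\vec{x}) + bL(\vec{y})$$

…where $a, b \in \mathbb{R}$ and $\vec{x}, \vec{y} \in \mathbb{R}^n$ (the domain of $L$). What this statement says is that, for a linear map, it does not matter if you do scalar multiplication and/or vector addition before (in the domain $\mathbb{R}^n$) or after (in the target $\mathbb{R}^m$) the map $L$; the answer will be the same. Scalar multiplication and vector addition[2] are defined as follows, using example $\mathbb{R}^2$:

$$a \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} a x_1 \\ a x_2 \end{bmatrix} \qquad \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} + \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} x_1 + y_1 \\ x_2 + y_2 \end{bmatrix}$$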

It turns out that any linear map from $\mathbb{R}^n$ to $\mathbb{R}^m$ can be represented by an $m \times n$ (rows $\times$ columns) matrix. Let’s look at some examples.
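As a quick numerical check of the linearity property, the sketch below applies a matrix to a combination of vectors in two ways: combining first and then mapping, or mapping first and then combining. The matrix `A`, the vectors `x` and `y`, the scalars `a` and `b`, and the helper functions are all hypothetical, chosen only for illustration.

```python
# Hypothetical 2x2 matrix, vectors and scalars (illustration only).
A = [[1, 2],
     [3, 4]]
x = [1, -1]
y = [2, 5]
a, b = 3, -2

def apply(M, v):
    """Apply matrix M (a list of rows) to the vector v."""
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]

def scale(c, v):
    """Scalar multiplication c * v."""
    return [c * vj for vj in v]

def add(u, v):
    """Vector addition u + v."""
    return [uj + vj for uj, vj in zip(u, v)]

# Combine first, then map.
before = apply(A, add(scale(a, x), scale(b, y)))
# Map first, then combine.
after = add(scale(a, apply(A, x)), scale(b, apply(A, y)))

print(before, after, before == after)
```

Both routes give the same vector, which is what linearity requires. A single numerical check does not prove linearity in general; it only illustrates the statement.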

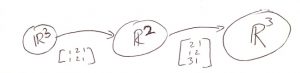

Example 17.1 : A map from $\mathbb{R}^2$ to $\mathbb{R}$.

Here is a $1 \times 2$ matrix that defines a linear map

. The map

takes the column vector

to the number

in

. For example, the vector

gets mapped to 8. Notice how the matrix is applied to the vector: the row of the matrix is matched to the column of the vector, the paired numbers are multiplied, and then the products are added.

▢
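The row-times-column rule can be written out for one illustrative case. The matrix and vector below are assumed values chosen for this sketch (they are not necessarily the ones used in the example), picked so that the result is also 8:

$$\begin{bmatrix} 2 & 1 \end{bmatrix} \begin{bmatrix} 3 \\ 2 \end{bmatrix} = (2)(3) + (1)(2) = 8$$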

Example 17.2 : A map from to

.

Note that gives us a nice compact way of writing the two equations:

Linear algebra’s major use is to solve such systems of linear equations. Let’s try some numbers in . Say

, then:

…so gets mapped to

.

▢

Example 17.3 : A map from $\mathbb{R}^3$ to $\mathbb{R}^2$.

Notice that the size of the matrix is $2 \times 3$ to give a map from $\mathbb{R}^3$ to $\mathbb{R}^2$. Again this is shorthand for

Let’s look at some numbers. Say , then:

…so gets mapped to

.

▢
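Applying a $2 \times 3$ matrix to a vector in $\mathbb{R}^3$ can be sketched in a few lines of Python. The matrix `A`, the vector `x` and the helper `apply` are hypothetical, used here only to show how each row of the matrix produces one of the two equations.

```python
# Hypothetical 2x3 matrix: a linear map from R^3 to R^2.
A = [[1, 0, 2],
     [-1, 3, 1]]
x = [2, 1, 4]   # hypothetical vector in R^3

def apply(M, v):
    """Each output entry is one row of M matched against v, multiplied and summed."""
    if any(len(row) != len(v) for row in M):
        raise ValueError("number of columns of M must equal the length of v")
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]

y = apply(A, x)
print(y)  # [10, 5]
```

The first entry is $1 \cdot 2 + 0 \cdot 1 + 2 \cdot 4 = 10$ and the second is $-1 \cdot 2 + 3 \cdot 1 + 1 \cdot 4 = 5$, so the output has two components, one per row of the matrix.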

Exercises

Compute:

17.1.3 Transpose of Matrices

Just like vectors, matrices have a transpose where rows and columns are switched. For example

Note how, for square matrices (where the number of rows is the same as the number of columns), the transpose flips the numbers across the diagonal of the matrix.
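A minimal sketch of the transpose in Python, assuming a matrix is stored as a list of rows. The matrices `A` and `S` are hypothetical examples.

```python
def transpose(M):
    """Switch the rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

A = [[1, 2, 3],
     [4, 5, 6]]   # hypothetical 2x3 matrix
S = [[1, 2],
     [3, 4]]      # hypothetical square matrix

print(transpose(A))  # [[1, 4], [2, 5], [3, 6]]
print(transpose(S))  # [[1, 3], [2, 4]]
```

For the square matrix `S`, the diagonal entries 1 and 4 stay in place while 2 and 3 swap across the diagonal.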

17.1.4 Matrix Multiplication

An $\ell \times m$ matrix can be multiplied with an $m \times n$ matrix to give an $\ell \times n$ matrix. For example, we can multiply a

matrix with a

matrix to give a

matrix:

Notice how the sizes of the matrices match so that the number of columns in the first matrix matches the number of rows in the second matrix; these inner dimensions kind of cancel to give the size of the resulting answer.

Matrix multiplication represents a composition of linear maps. In the above example the situation is:

Note that the matrix on the right is applied first. (If you wanted to apply the matrices to a vector, you would write the vector on the right.)
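The link between matrix multiplication and composition of maps can be checked numerically. In this sketch the matrices `A` and `B`, the vector `x` and the helpers `matmul` and `apply` are hypothetical; the point is only that applying `B` and then `A` to a vector gives the same result as applying the single product matrix.

```python
def matmul(A, B):
    """Multiply A (l x m) by B (m x n), giving an l x n matrix."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def apply(M, v):
    """Apply matrix M (a list of rows) to the vector v."""
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]

A = [[1, 2, 0],
     [0, 1, 1]]   # hypothetical 2x3 matrix
B = [[1, 0],
     [2, 1],
     [0, 3]]      # hypothetical 3x2 matrix
x = [1, 2]        # hypothetical vector in R^2

AB = matmul(A, B)              # 2x2 result: the inner 3's cancel
print(AB)                      # [[5, 2], [2, 4]]
print(apply(A, apply(B, x)))   # B applied first, then A: [9, 10]
print(apply(AB, x))            # the product matrix gives the same vector: [9, 10]
```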

When you multiply two square matrices $A$ and $B$ (both $n \times n$) then, in general,

$$AB \neq BA$$
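A small worked case shows that the order of multiplication matters. The two matrices here are hypothetical, chosen only because the arithmetic is short:

$$A = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}, \quad B = \begin{bmatrix} 1 & 0 \\ 1 & 1 \end{bmatrix}, \quad AB = \begin{bmatrix} 2 & 1 \\ 1 & 1 \end{bmatrix}, \quad BA = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}$$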

Exercises

Compute:

and

to see that the results are different.

17.1.5 Linearly Independent Vectors

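From an abstract point of view, a set of $p$ vectors $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_p$ in $\mathbb{R}^n$ is said to be linearly independent if the equation

$$a_1\vec{v}_1 + a_2\vec{v}_2 + \cdots + a_p\vec{v}_p = \vec{0}$$

has only one solution:

$$a_1 = a_2 = \cdots = a_p = 0$$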

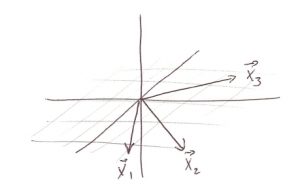

When vectors are linearly independent, you cannot express one vector as a linear combination of the other vectors. Geometrically (for example in $\mathbb{R}^3$):

If $\vec{v}_1$, $\vec{v}_2$ and $\vec{v}_3$ are all in the same plane then they are not linearly independent. In that case we could find $a$ and $b$ such that $\vec{v}_3 = a\vec{v}_1 + b\vec{v}_2$.

In an $n$-dimensional space it is possible to take, at most, a set of $n$ linearly independent vectors.
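For three vectors in $\mathbb{R}^3$, one way to test linear independence is the determinant of the matrix that has the vectors as columns: the vectors are independent exactly when that determinant is nonzero. The determinant test is a standard fact that is not covered in this overview, and the vectors and the helper `det3` below are hypothetical, so treat this as a sketch.

```python
def det3(u, v, w):
    """Determinant of the 3x3 matrix whose columns are u, v and w."""
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - v[0] * (u[1] * w[2] - u[2] * w[1])
            + w[0] * (u[1] * v[2] - u[2] * v[1]))

# Three hypothetical vectors along the coordinate axes: independent.
e1, e2, e3 = [1, 0, 0], [0, 1, 0], [0, 0, 1]
axes_det = det3(e1, e2, e3)

# Hypothetical coplanar vectors: w is built as 2*u + 3*v.
u = [1, 0, 2]
v = [0, 1, 1]
w = [2 * ui + 3 * vi for ui, vi in zip(u, v)]
coplanar_det = det3(u, v, w)

print(axes_det != 0)       # True: linearly independent
print(coplanar_det != 0)   # False: w lies in the plane of u and v
```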

17.1.6 Rank of a Matrix

Define :

Row rank = the number of linearly independent row vectors in a matrix.

Column rank = the number of linearly independent column vectors in a matrix.

It turns out that:

row rank = column rank = rank

We won’t cover the mechanics of how one calculates the rank of a matrix (take a linear algebra course if you want to know). Instead we just need to understand intuitively what the rank of a matrix means. Consider some simple examples :

Example 17.4 : The matrix

has rank = 1 because one column is a multiple of the other:

▢

Example 17.5 : The matrix

has rank = 2 because there is no way to find such that

▢

Example 17.6 : The matrix

has rank = 3.

▢

Example 17.7 : The matrix

has rank = 2 since

▢
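Although the mechanics are outside the scope of this overview, the rank can be computed by row reduction, counting how many pivot rows survive. The sketch below is one possible way to do that with exact fractions; the function `rank` and the four test matrices are hypothetical and are not the matrices from the examples above, though they have the same ranks (1, 2, 3 and 2).

```python
from fractions import Fraction

def rank(M):
    """Rank of M via Gaussian elimination with exact fractions."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0  # number of pivot rows found so far
    for c in range(len(rows[0])):
        # Look for a row at or below position r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Subtract multiples of the pivot row to clear column c below it.
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

print(rank([[1, 2], [2, 4]]))                    # 1: second column is twice the first
print(rank([[1, 2], [3, 4]]))                    # 2
print(rank([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))   # 3
print(rank([[1, 0, 1], [0, 1, 1], [1, 1, 2]]))   # 2: third row = first + second
```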

17.1.7 The Inverse of a Matrix

For some square matrices ($n \times n$) $A$ it is possible to find an inverse matrix, $A^{-1}$, so that

$$A A^{-1} = A^{-1} A = I$$

where $I$ is the identity matrix that has 1 on the diagonal and 0 everywhere else.

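For example, in $\mathbb{R}^2$:

$$I = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

In $\mathbb{R}^3$:

$$I = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

In $\mathbb{R}^4$:

$$I = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

…etc.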

Again, we won’t learn how to compute the inverse of a matrix but it is important to know that an $n \times n$ matrix $A$ will have an inverse $A^{-1}$ if and only if rank$(A) = n$.
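For the $2 \times 2$ case the connection between rank and invertibility can be illustrated with the standard closed-form inverse, which exists only when the determinant $ad - bc$ is nonzero (equivalently, when the rank is 2). The helper names and matrices below are hypothetical; this is a sketch, not a general-purpose routine.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Inverse of a 2x2 matrix, or None when the determinant is zero (rank below 2)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        return None
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[2, 1],
     [5, 3]]                  # hypothetical matrix of rank 2
A_inv = inverse_2x2(A)
identity = [[1, 0], [0, 1]]

print(matmul(A, A_inv) == identity)    # True
print(matmul(A_inv, A) == identity)    # True
print(inverse_2x2([[1, 2], [2, 4]]))   # None: rank 1, so no inverse
```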

17.1.8 Solving Systems of Equations

In general a system of linear equations can be represented by

$$A\vec{x} = \vec{b}$$

where $\vec{x} \in \mathbb{R}^n$, $\vec{b} \in \mathbb{R}^m$ and $A$ is an $m \times n$ matrix known as the *coefficient matrix*. Here $\vec{b}$ represents the known values and $\vec{x}$ represents the unknown values.

There are 3 cases:

1. $m < n$, fewer equations than unknowns. No unique solution.

2. $m = n$, number of equations = number of unknowns.

- Rank$(A) < n$, no unique solution. This is really the same as case 1 because at least one of the equations is redundant.

- Rank$(A) = n$. This has the unique solution $\vec{x} = A^{-1}\vec{b}$.

3. $m > n$, more equations than unknowns.

- Rank$(A) < n$, inconsistent formulation, no solution possible.

- Rank$(A) = n$ ($A$ is of full rank). A least squares solution is possible and is given by:

$$\vec{x} = (A^T A)^{-1} A^T \vec{b}$$

That last least squares solution is the punchline to this very quick overview of linear algebra. It is derived using differential calculus in the same way that least squares solutions were derived for linear and multiple regression. The existence of this least squares solution allows us to unify many statistical tests into one big category called the General Linear Model.
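The least squares formula $(A^T A)^{-1} A^T \vec{b}$ can be tried on a tiny overdetermined system. The sketch below fits a straight line to three points, giving 3 equations in 2 unknowns. The data, the helper functions and the use of a closed-form $2 \times 2$ inverse are assumptions made for illustration; statistical software would normally use a more numerically robust method.

```python
from fractions import Fraction

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def inverse_2x2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is not of full rank")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

# Hypothetical data: fit b = x1 + x2 * t at t = 0, 1, 2.
A = [[1, 0],
     [1, 1],
     [1, 2]]            # coefficient matrix: 3 equations, 2 unknowns, rank 2
b = [[1], [2], [4]]     # known values as a column vector

At = transpose(A)
# x = (A^T A)^{-1} A^T b
x = matmul(matmul(inverse_2x2(matmul(At, A)), At), b)

intercept, slope = x[0][0], x[1][0]
print(intercept, slope)   # 5/6 3/2
```

No straight line passes through all three assumed points, so the system has no exact solution; the formula returns the intercept and slope that minimize the sum of squared differences between $A\vec{x}$ and $\vec{b}$.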

[1] The number $n$ will also mean sample size later on because you can organize a data set into a column vector of dimension $n$. In fact, you give SPSS a data vector by entering a column of numbers as a "variable" in the input spreadsheet.

[2] Abstractly, a vector space is a set where scalar multiplication and vector addition can be sensibly defined.