11 Ease, Precision, and Adaptability: Creating Videos with AI

Jonathan Nickel

Abstract

Video production was once a difficult and time-consuming enterprise beyond the ability of most instructional designers. The embedding of AI into video-creation tools has made the process accessible even to novices. Many generative AI applications use a text-to-video interface to produce high-quality, engaging videos. Invideo AI is one such tool: it helps users create content-focused videos quickly and easily, and those videos remain adaptable into the future. AI-generated video production is a rapidly developing field holding great promise for educational technology, yet there are concerns about ethical and responsible use. As generative AI tools evolve, it is important for research and oversight to keep pace.

Figure 1

Instructional Designer Uses AI to Create Engaging Content for Learners

Introduction

We live in a world where the presence of screens and videos has become almost an assumption in every aspect of life. Screens are in homes, shopping centers, sports facilities, and restaurants; they are embedded into phones, vehicles, and appliances. With the evolution and adoption of screens in so many places, people have come to expect and depend on the medium. As society has embraced video, it has also come to be an important component of teaching and learning (Draus et al., 2014; Guo et al., 2014). Incorporating video into instructional design increases the engagement of modern learners and can help a course seem current and relevant (Carmichael et al., 2018). Formats like TED Talks and MasterClass have set certain standards for educational videos, but TikTok, YouTube Shorts, and others have joined the fray, seeking views among would-be learners. It is difficult to compete with the dynamic and engaging content that learners are exposed to through online videos: most instructors simply do not have the time to find or create engaging videos that specifically address content needs (Draus et al., 2014; Sherer & Shea, 2011). However, emerging AI tools show great potential in this area.

There are now many Generative Artificial Intelligence (GAI) tools that can help instructional designers create videos that address precisely targeted content and users (Zhou et al., 2024). Invideo AI is one such tool that greatly eases the burden of video creation. Users can create high-quality videos directly from a prompt about any topic, allowing even the inexperienced to tap into this valuable resource. GAI bears the load of video creation, effectively managing all the time-consuming details of production (Zhou et al., 2024). Through vague or detailed prompts, Invideo AI allows instructional designers to create original and targeted videos which will engage learners in their courses.

Learning Objectives

- Understand how using GAI video tools such as Invideo AI can improve learner engagement.

- Know how GAI can help users create precise, focused, and adaptable videos with relative ease.

- Evaluate the potential benefits and risks of AI video tools.

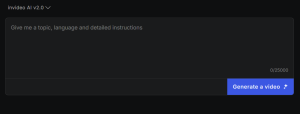

Case Study

Video use has been shown to broaden participation while positively affecting motivation, emotional engagement, and overall course engagement (Carmichael et al., 2018; Sherer & Shea, 2011). Unfortunately, it can be difficult for educators and designers to find videos that are appropriately focused and effective while being legally accessible. These challenges may lead some instructors to make their own videos. This is a reasonable response, as the use of instructor-generated videos seems particularly valuable to learner engagement and course satisfaction (Draus et al., 2014). Challenges remain, however: video creation has typically been an arduous process, and educators already have many demands on their attention, time, and energy. The integration of large language models (LLMs) and GAI into video creation has already advanced the field significantly, and instructional designers can benefit in three specific ways: GAI has made video creation much simpler and less time-consuming; it allows designers to create videos that are precisely focused; and it helps keep videos relevant by facilitating easier adaptation and revision (see Figure 2). These benefits are discussed in the following sections in the context of one GAI video tool: Invideo AI.

Figure 2

Three Primary Benefits of Generative AI Video Production

Note. Jonathan Nickel (2024) generated this figure using the Microsoft 365 platform. I dedicate any rights I hold to this figure to the public domain via CC0.

Efficient and Easy

Digital video has been around for decades, but creating a video from an assortment of clips has remained time-consuming, and that assumes one has access to good raw video clips to begin with. The incorporation of AI into video generation has made the process almost ridiculously quick. A few key features that have simplified and sped up the process have become quite common among GAI video applications.

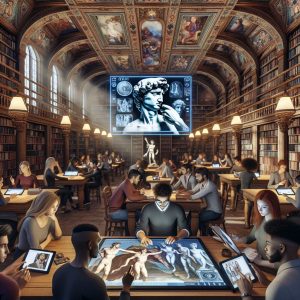

Perhaps the most significant time-saving feature of Invideo AI is text-to-video generation, which is almost as simple as it sounds. New users of Invideo AI are presented with a box in which to write their prompts (see Figure 3). In the simplest approach, users can type any topic, and AI will create a video with script, visuals, subtitles, voiceover, and music (Invideo, 2024). For example, a user could prompt Invideo AI to “generate a video about Michelangelo,” and it will create a very acceptable video about the Renaissance master, with an original script, stock images and footage, and a narrator’s voice. The video may not be exactly what the user had in mind, but the result can be modified in different directions and to different extents by adjusting and adding to the prompt.

Figure 3

Prompt Box from Invideo AI

Note. Screenshot used with permission from Invideo AI.

Users can create a new video or change an existing one by giving prompts that guide Invideo AI as if it were a film editor and they were the director. In this way they can specify the video length and format, but they might also ask AI to alter or replace the narrator’s voice, add subtle humor, or use clips with college-age students. Invideo AI can respond appropriately to almost any prompt, yet if very specific changes are desired, users can still use the edit function to manually adjust any part of the script, score, or video clips themselves. Each of these steps takes time, but AI makes the process much faster than traditional editing.

As with most AI tools, the power is in the prompt. Prompts might be considered as pointing AI toward a certain target: the more details that AI is given, the closer it will get to the intended target or end goal. AI video tools will hit the desired target more precisely and quickly as instructional designers learn to describe their desired finished product accurately.

Precise and Focused

With so much video content online, it may seem that every possible topic must be available somewhere. However, an instructor may have a very specific focus for which no appropriate video is available. Existing videos may be too long and go beyond what an instructor desires, or they may be too short and miss the mark of what an instructor intends. When it comes to video content, precision and focus are something of a holy grail for instructional designers. To justify the effort of custom video creation, it is important for educators to know that a finished product will be focused appropriately. As Nyame and Bengesi (2024) observe, “Generative AI allows its users to pay more attention to the strategic aspect of video making” (p. 2). Just as Invideo AI can style a video according to the designer’s prompts, it can also precisely tailor the content.

As mentioned already, video creation begins from a prompt. That prompt can be as specific or vague as the designer is willing or able to provide. Users can simply ask Invideo AI to create a video on any topic and it will produce an acceptable one within minutes. However, as with style direction, pointing AI more specifically toward a target will produce a more accurate result. Users can have Invideo AI base a video on information from a specific website or link. They can include course notes or even a complete lecture script in their prompt as the basis for the video. With these approaches, Invideo AI will create the desired video using its vast library of stock footage, images, voices, and music.
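The difference between a vague and a detailed prompt can be illustrated with a minimal sketch. The Python below only assembles prompt text that a designer might paste into the prompt box; it does not call Invideo AI or any other service. The `VideoBrief` class, the `build_prompt` function, and the example link are hypothetical names introduced here for illustration, and the wording of the sentences is an assumption rather than a format the tool requires.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VideoBrief:
    """Hypothetical container for details a designer might put in a prompt."""
    topic: str
    minutes: Optional[int] = None      # desired running time
    narrator: Optional[str] = None     # e.g. "a female voice"
    focus: Optional[str] = None        # what the script should emphasize
    source_url: Optional[str] = None   # page to base the script on


def build_prompt(brief: VideoBrief) -> str:
    """Assemble plain prompt text; each optional detail adds one sentence."""
    length = f"{brief.minutes}-minute " if brief.minutes else ""
    parts = [f"Generate a {length}video about {brief.topic}."]
    if brief.focus:
        parts.append(f"Focus on {brief.focus}.")
    if brief.narrator:
        parts.append(f"Use {brief.narrator} for narration.")
    if brief.source_url:
        parts.append(f"Base the script on {brief.source_url}.")
    return " ".join(parts)


# A vague prompt names only the topic.
vague = build_prompt(VideoBrief(topic="Michelangelo"))

# A detailed prompt points the tool more precisely at the intended target.
detailed = build_prompt(VideoBrief(
    topic="Michelangelo",
    minutes=3,
    narrator="a female voice",
    focus="his art rather than his biography",
    source_url="https://example.com/michelangelo-art",  # placeholder link
))

print(vague)
print(detailed)
```

Keeping the details in a small structure like this makes it easy to reuse the same brief later, changing only the field that needs revision.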

Vignette Part 1

Sarah, an educator in Renaissance art history, decides to use Invideo AI to create a video about Michelangelo for learners to use as a study tool in her course. She starts simply with the prompt: “generate a three-minute video about Michelangelo.” Sarah is quite pleased with the resulting video, which incorporates appropriate clips of Michelangelo’s work. However, she finds the script too biographical, with not enough description or discussion of his work. She had also forgotten to specify her preference for a female narrator. In the prompt she changes the narrator’s voice and adds a link to a website she likes that focuses less on his life and more on his art. The second video is much better than the first: it addresses the issues as she intended and adds a pleasant female voice for narration. Sarah downloads the video and shares it with her students.

If designers want to take things further, they can upload their own clips and images to incorporate into videos. Invideo AI is also able to clone voices: designers can then select their own voice for video narration. With these capabilities, Invideo AI allows users to make precision-focused personalized videos that are tailored to very specific needs.

Adaptability

There are a few issues that have typically accompanied media and technology. High-quality media is difficult to modify for additional audiences from other cultures for example. It also may look old or dated over time; that is, the style or details may not age well. Invideo AI and GAI video productions hold great promise in these areas.

First, Invideo AI is able to produce videos in various languages directly from the initial prompt. Designers can also take a video they have already made and have Invideo AI produce a version in another language. This allows designers to present their work to diverse audiences or to address specific language needs among their clientele.

As time progresses, contexts may change in our world: styles evolve, new information comes to light, what was acceptable may cease to be so, and technology continues to improve. If for any reason designers find that their videos are not aging well, they can go back and update them quite easily. Invideo AI users can edit their videos to make specific changes to script, clips, or style at any time to keep them current and relevant. A designer could also choose to create an entirely new video using the same prompts. In addition, LLMs will continue to contribute to GAI technologies, allowing for adaptable content delivery and more user-centric experiences (Zhou et al., 2024). Stock libraries will continue to grow and AI will continue to develop, which means that an app such as Invideo AI will be able to make even better videos as time goes on.

Vignette Part 2

In her first-term class, Sarah’s students really appreciated the video she had made. She is now teaching the course a second time and has subscribed to the less expensive paid version of Invideo AI, so Sarah decides to update her Michelangelo video. She chooses some different video clips, adds some images and clips from her own travels, and focuses the prompt for the video more precisely on her content. Sarah has cloned her own voice, so the new video will sound as if she narrated it herself. Her current class also has several recent newcomers from Ukraine, so Sarah decides to translate the video into Ukrainian. Her friend, a native Ukrainian speaker, reviews the translation and is impressed with the finished video. Sarah removes the watermarks from the English and Ukrainian versions, downloads them, and shares them with her class.

Pricing

It is worth noting that Invideo AI has three plan levels. The basic plan is free and includes all the capabilities mentioned above. However, videos produced in the free plan will include Invideo AI watermarks and lack some impressive features of the paid plans. Paid plans remove watermarks, give users more space for their own uploaded content, and allow for more personalized narration through voice-cloning.

Responsible Use of AI

As with any use of AI, it is important to be aware of potential issues before beginning and while using these tools. The ease of using text-to-video apps such as Invideo AI is accompanied by the potential inclusion of inaccurate information. It is very important that designers ensure that their videos include only accurate and appropriate information. Issues could arise simply from inaccuracy, but also from a perpetuation of bias or stereotypes originating in the data on which the large language models (LLMs) behind AI tools are trained (Ferrara, 2024). These could appear incidentally or accidentally in both the information and the images that tools incorporate into videos. Designers must take great care to verify their choices and those of AI to avoid these scenarios.

There is also the potential for AI videos to be used intentionally to spread biases or false information, or for more nefarious purposes (Ferrara, 2024). While one would hope that instructional designers would never choose to do this, the ease of use and powerful abilities of AI might tempt a user to create harmful material. Invideo AI and other apps can quickly produce impressive products in which the distinction between the real and the artificial is hazy at best (Nyame & Bengesi, 2024). By combining a few different tools, a relatively inexperienced designer could cause real damage with GAI video creation by violating privacy or intellectual property rights, falsifying information, or using deepfakes in a variety of frightening directions (Zhou et al., 2024).

AI is a very powerful tool that has the potential to reduce workload and speed the process of many tasks. However, one must remember that it is a tool. As with any tool, it should be used responsibly and with care: there is always a chance of damage or injury being done. In moving forward with AI-powered video production it will be important to balance innovation, regulation, and responsible ethical practices (Nyame & Bengesi, 2024; Wallach & Marchant, 2019). Designers who choose to use AI tools to create videos will likely be impressed with the results and speed with which a product can be delivered. However, these tools are best used by a cautious and well-trained hand.

Future Research and Innovation

The field of AI-powered video creation would benefit from additional research in several directions. As discussed earlier, video use is well documented to support learner motivation and engagement (Carmichael et al., 2018; Sherer & Shea, 2011); less is known, however, about what specific video qualities or details contribute to that effect. For example, while research suggests that shorter videos are more effective, there is no solid consensus on optimal length, and questions remain about the relationship between run-time and engagement (Guo et al., 2014). Similar questions could be considered for each of the many aspects of video and GAI video production. The cognitive theory of multimedia learning (CTML) attempts to “explain how people learn academic material from words and graphics” (Mayer, 2024, p. 1), and CTML research has led to many principles for multimedia instructional design (see Table 1 for descriptions of several of these principles and how they might be applied to GAI video production). Other research has provided recommendations for visual, verbal, and text-based video components while also suggesting that the use of an instructor’s image or “presence” in videos seems to be more engaging for learners (Carmichael et al., 2018). There is significant potential benefit to be gained from accurately determining specific best practices in video production, especially along the lines of self-regulated learning and motivation (Mayer, 2024). These and other ideas deserve attention in research, especially within the context of GAI-powered video production.

Table 1

CTML principles and potential application to GAI video production

| Mayer’s Principle | Description | Application to GAI Video Production |
| --- | --- | --- |
| Dual-Channel | Humans process information through separate channels for visual and auditory input. | Ensure GAI videos use both visual elements (animations, graphics) and auditory elements (narration, sound effects) to enhance learning. |
| Limited Capacity | Each channel has a limited capacity for processing information. | Avoid overloading viewers by keeping videos concise and focused, using clear and simple visuals and audio. |
| Active Processing | Learning involves actively selecting, organizing, and integrating information. | Design GAI videos to encourage interaction, such as pausing for reflection or including prompts for viewers to think about the content. |
| Coherence Principle | Exclude extraneous content that does not support the learning goal. | Keep GAI videos free from unnecessary information or distractions to maintain focus on key concepts. |
| Redundancy Principle | People learn better from graphics and narration than from graphics, narration, and on-screen text. | Use narration to explain visuals rather than adding redundant on-screen text, unless the text is essential for understanding. |
| Segmenting Principle | Learners fare better when information is presented in learner-paced segments. | Break down GAI videos into manageable segments, allowing viewers to control the pace of their learning. |
| Personalization Principle | People learn better from a conversational style than from a formal style. | Use a friendly, conversational tone in GAI video narrations to make the content more relatable and engaging. |
| Generating Principle | Learners retain and understand better when asked to respond to material by generating their own content or explanations. | Provide or suggest opportunities in GAI videos for viewers to create their own summaries, examples, or explanations of the content presented. |

Note. This table was designed using the Copilot platform and incorporates Mayer’s (2024) CTML principles.

Current AI-powered video apps are already very powerful, efficient, and effective, and they have been adopted broadly. Invideo AI states that it had over 24 million customers from 190 countries as of September 2024 (Invideo, 2024), and this is just one among many AI video apps. While this rapid adoption, acceptance, and increase in use is exciting for instructional designers, it is also reason to proceed with a degree of caution. While new and powerful technologies may immediately impress, such rapid development may at times outpace humans’ ability to prepare appropriately for their use (Critch & Krueger, 2020; Wallach & Marchant, 2019). There is a growing awareness of the need for policies and oversight that can keep pace with the development and evolution of AI (Duenas & Ruiz, 2024; Walter, 2024). Humans will need to reconcile the current pace of development and use of AI-powered video generation with the associated potential risks (Goldfarb, 2024; Zhou et al., 2024). “Because of its capacity to produce incredibly realistic and compelling visual content, it may be used for both good and bad, emphasizing the necessity of continuing research and creating strong safety measures to reduce the hazards connected with this technology” (Nyame & Bengesi, 2024, p. 7). AI-generated video and GAI will continue to evolve rapidly, and potential developments are mind-boggling. Video and AI will likely continue their current trajectory, showing up in mundane and surprising spaces, independently and together. Exciting new uses of GAI will appear frequently, and it is vital that effective use, research, and oversight keep pace.

Figure 4

People Engage with New and Old Ways of Learning

Note. Jonathan Nickel (2024) generated this image using the Copilot platform. I dedicate any rights I hold to this image to the public domain via CC0.

Summary

Generative AI Video Creation

Note. This video was produced by Jonathan Nickel using Invideo AI.

Disclaimer

My video summary was made with the free version of Invideo AI. As such, it includes copyrighted images and video clips which are watermarked. My choice was intentional, as I expect that many educators (and students) who use Invideo AI will choose the free version, and I wanted to give a realistic example of what is possible with it. However, I also want to acknowledge the ethical questions relating to intellectual property that arise with my choice. As you consider using Invideo AI or other creative tools, ask yourself:

- Does my intended use fall within “fair use” for educators?

- What is the difference between fair use and copyright infringement?

- Do you think it is fair for creators to be compensated for their work?

- What does it mean to respect intellectual work?

- What are the long-term consequences of normalizing copyright infringement?

As AI-powered creative tools continue to expand their capabilities, I encourage you to consider the impact our use has on the evolution of this exciting field.

Acknowledgements

I would like to thank Dr. Paula MacDowell and peers from the University of Saskatchewan Educational Technology and Design 873 course for their helpful suggestions in preparing this chapter. Special thanks to Marena Duffus, who provided significant detailed feedback to help improve my chapter.

Thanks to Invideo AI for the inspiration and permissions they gave me to pursue this topic.

Generative AI on various platforms was used to generate images, develop Table 1, and ensure my work was consistent with APA 7 formatting. The summary video was created using Invideo AI.

Open Researcher and Contributor ID (ORCID)

Jonathan Nickel https://orcid.org/0009-0003-2816-981X

Jonathan has been involved in education for more than two decades. He is currently working on his Master’s in Educational Technology and Design at the University of Saskatchewan in Canada. Jonathan’s research interests center on how and where meaningful learning takes place.

References

Carmichael, M., Reid, A., & Karpicke, J. D. (2018). Assessing the impact of educational video on student engagement, critical thinking and learning. A SAGE white paper.

Critch, A., & Krueger, D. (2020). AI research considerations for human existential safety (ARCHES). ArXiv. https://doi.org/10.48550/arXiv.2006.04948

Draus, P. J., Curran, M. J., & Trempus, M. S. (2014). The influence of instructor-generated video content on student satisfaction with and engagement in asynchronous online classes. MERLOT Journal of Online Learning and Teaching, 10(2), 240-250.

Duenas, T., & Ruiz, D. (2024). The path to superintelligence: A critical analysis of OpenAI’s five levels of AI progression.

Ferrara, E. (2024). GenAI against humanity: Nefarious applications of generative artificial intelligence and large language models. Journal of Computational Social Science, 7(3), 549–569. https://doi.org/10.1007/s42001-024-00250-1

Goldfarb, A. (2024). Pause artificial intelligence research? Understanding AI policy challenges. Canadian Journal of Economics/Revue canadienne d’économique, 57(2), 363-377. https://doi.org/10.1111/caje.12705

Guo, P., Kim, J., & Rubin, R. (2014). How video production affects student engagement: An empirical study of MOOC videos. https://doi.org/10.1145/2556325.2566239

Hosseini, D. D. (2024, February 3). Generative AI: a problematic illustration of the intersections of racialized gender, race, ethnicity. https://doi.org/10.31219/osf.io/987ra

InVideo. (2024). AI Video Creation Platform. InVideo. https://invideo.io/ai/

Mayer, R. E. (2024). The past, present, and future of the cognitive theory of multimedia learning. Educational Psychology Review, 36(1), 8. https://doi.org/10.1007/s10648-023-09842-1

Nyame, L., & Bengesi, S. (2024). Generative artificial intelligence trend on video generation. Preprints. https://doi.org/10.20944/preprints202409.0195.v1

Seo, K., Dodson, S., Harandi, N. M., Roberson, N., Fels, S., & Roll, I. (2021). Active learning with online video: The impact of learning context on engagement. Computers & Education, 163, 104119. https://doi.org/10.1016/j.compedu.2020.104119

Sherer, P., & Shea, T. (2011). Using online video to support student learning and engagement. College Teaching, 59(2), 56-59. https://doi.org/10.1080/87567555.2010.511313

Wallach, W., & Marchant, G. (2019). Toward the agile and comprehensive international governance of AI and robotics [Point of view]. Proceedings of the IEEE, 107(3), 505-508. https://doi.org/10.1109/JPROC.2019.2899422

Walter, Y. (2024). Managing the race to the moon: Global policy and governance in artificial intelligence regulation—A contemporary overview and an analysis of socioeconomic consequences. Discover Artificial Intelligence, 4(14). https://doi.org/10.1007/s44163-024-00109-4

Zhou, P., Wang, L., Liu, Z., Hao, Y., Hui, P., Tarkoma, S., & Kangasharju, J. (2024). A survey on generative AI and LLM for video generation, understanding, and streaming. arXiv preprint arXiv:2404.16038.