6 Hacking Saskatchewan Computer Science Curricula with ChatGPT-4, Python, and Soft-tech

Brett Balon

Abstract

This chapter explores the use of AI to streamline instructional design (ID) for computer science (CSC) education in Saskatchewan, addressing challenges posed by diverse student abilities and classroom orientations. By leveraging ChatGPT-4 and Python, educators can generate personalized assignments aligned with curricular outcomes, coding preferences, and skill levels. AI-generated assignments individualize instruction while reducing teacher workload through similarly GPT-4-augmented, Python-powered automated evaluation scripts. Operating locally, the script ensures student data privacy and legal compliance. Access and equity considerations are addressed through a soft-tech approach, emphasizing fairness, transparency, and academic integrity. This balanced approach enhances learner autonomy and fosters a more equitable, sustainable practice for CSC educators in a rapidly evolving field.

The author will provide the reader with a Python script, powered by static AI that has been programmed into the script via iterative rubric training through GPT-4o. It allows teachers to have student coding assignments graded automatically at the moment students submit them, all kept within a local school network, with granular, aggregate, and customized qualitative feedback provided to students within a password-protected PDF.

Introduction

This whole chapter could be summarized thusly: Upload your students’ CSC assignments to GPT-4 along with your rubric. Press send, receive results, and post grades to your division’s LMS. However, under no circumstances should any educator ever send student data to a third-party corporation across international borders, where the software’s EULA may claim ownership of that data. Compounding this challenge is the fact that educators struggle to authentically evaluate student coding assignments in an age where AI can generate code. A more novel approach to streamlining CSC ID is needed.

GPT-4, being made of code, is an obvious tool to deploy. It addresses this challenge by enabling students to generate customized assignments aligned with curricular outcomes, coding preferences, skill levels, and native languages. It can also generate software that automates evaluation, delivering immediate, tailored feedback. By leveraging AI in this way, CSC ID becomes more adaptable to diverse student needs, reduces the burden on teachers, and ensures compliance with legal requirements regarding student data privacy. Soft-tech approaches can also be utilized to facilitate an authentic and valuable CSC education experience, but they may obfuscate the roles of the teacher and student in the classroom. However, this obfuscation may be inevitable; no human instructor can possibly be an expert coder in all languages, and as digital natives become increasingly literate, the instructor needs to focus their time and energy on tasks that facilitate student learning rather than pore over thousands of lines of code that they may or may not understand. Some organized chaos will result, but automated grading software can make it manageable (Figure 1).

Figure 1

A CSC Classroom, Instructor and Students Working Together in Organized Chaos

Note. Brett Balon (2024) generated this image using the DALL-E model via GPT-4o. I dedicate any rights I hold to this image to the public domain via CC0.

Learning Objectives

- Understand the role of AI in adapting CSC instruction to varying student skill levels.

- Explore how AI-generated assignments support personalized learning aligned with curricular outcomes.

- Evaluate the challenges and ethical considerations in monitoring AI use in student-generated assignments via soft-tech.

- Learn to generate software using GPT-4 to automate and optimize the evaluation and feedback process.

- Obtain a working Python script for automated grading.

Case Study

Vignette 1 – Enough with the Gatekeeping

As I glanced around the classroom, I could see the benefits of using ChatGPT-4 for personalized assignments. Dani, who had struggled with coding, was focused on a Python project, progressing at her own pace. Meanwhile, Jay, one of my more advanced students, was deep into a complex algorithm well beyond our curriculum. AI allowed each student to work at their skill level, reducing frustration and boredom. When I notice students quickly closing an AI tool, I don’t reprimand them. Instead, I have a conversation about using AI as a learning tool rather than a shortcut.

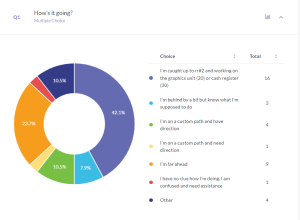

In Saskatchewan CSC classrooms, student abilities vary drastically. Some are far ahead due to digital nativity, prior experience, or innate talent. Others struggle, especially when CSC20/30 are combined into one class, which is the predominant practice in this locale. This creates a stratified classroom where managing individualized instruction becomes a constant challenge. After recently surveying my students (Figure 2) on their perception of progress in one of my blended CSC 20/30 courses, I found that the typical bell curve was replaced by scattered spikes.

Figure 2

Student Perceptions of Progress in a CSC 20/30 Course

To address this, I implemented a system of AI-assisted prompt engineering. Students generate their assignments or projects, which are directly aligned with curricular outcomes and tailored to their individual programming language preferences and coding abilities. These AI-generated assignments allow students to work at their own pace, reducing the intimidation or boredom that can accompany a stratified learning environment. A useful prompt for this purpose can be refined through Feedough.com’s prompt refinement tool, as demonstrated by Sam Roberts in the previous chapter of this textbook (Chapter 5).

However, grading these personalized assignments poses a significant challenge. Traditional CSC grading methods – already a mammoth task – are not feasible for such individualized work. To address this, I developed a Python script with the help of GPT-4o, featuring built-in AI evaluation capabilities. Trained on a common CSC rubric, the script can accurately assess any assignment – regardless of programming language or complexity – and give feedback in real time. It also ensures student data privacy by operating entirely within a local school network.

Why GPT-4 and Python? In my opinion, GPT-4, already powered by Python, is the most viable tool for driving this ID shift. While other AI tools may come and go, GPT-4 stands at the forefront of the new age of AI. Importantly, both are free, increasing accessibility to schools that lack resources for expensive software solutions. By using GPT-4 and Python, teachers can access cutting-edge AI technology without needing to allocate funds they likely do not have. Together, they form a natural, standard, reliable, sustainable, accessible, and affordable combination for educational environments that seek to streamline CSC ID.

Lastly, the automated grading software exports granular (assignment- and criteria-specific) and aggregate (overall average and individualized feedback) data to an Excel or comma-separated values (CSV) file, which can communicate with most learning management systems (LMS). This software has been tested extensively and is available to readers as open source via CC0.
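The granular and aggregate exports described above might be sketched as follows. This is a minimal illustration using only Python's standard `csv` module, not the chapter's actual script: the function names, column names, student label, and sample scores are all assumptions made for the example, and only the five criterion names come from the rubric in this chapter.

```python
import csv
import io

# Criterion names follow the chapter's rubric; column names are illustrative.
CRITERIA = ["Specifications", "Readability", "Reusability", "Documentation", "Efficiency"]
MAX_PER_CRITERION = 4


def granular_rows(student, assignment, scores):
    """Return one row per rubric criterion for a single graded assignment."""
    return [
        {"student": student, "assignment": assignment,
         "criterion": name, "score": scores[name], "out_of": MAX_PER_CRITERION}
        for name in CRITERIA
    ]


def aggregate_row(student, graded):
    """Return one summary row: the average percentage over all assignments."""
    possible = MAX_PER_CRITERION * len(CRITERIA)
    percents = [100 * sum(scores[name] for name in CRITERIA) / possible
                for scores in graded.values()]
    return {"student": student, "assignments": len(percents),
            "average_percent": round(sum(percents) / len(percents), 1)}


def to_csv(rows):
    """Serialize a non-empty list of dict rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up sample data for one placeholder student.
graded = {
    "Assignment 1": {"Specifications": 4, "Readability": 3, "Reusability": 3,
                     "Documentation": 2, "Efficiency": 4},
    "Assignment 2": {"Specifications": 4, "Readability": 4, "Reusability": 4,
                     "Documentation": 4, "Efficiency": 4},
}
granular = [row for name, scores in graded.items()
            for row in granular_rows("Student A", name, scores)]
aggregate = [aggregate_row("Student A", graded)]

granular_csv = to_csv(granular)    # text that could be saved as a granular CSV file
aggregate_csv = to_csv(aggregate)  # text that could be saved as an aggregate CSV file
```

Keeping the two exports as separate tables means the granular file can feed criterion-level feedback while the aggregate file holds the single mark per student that an LMS gradebook import usually expects.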

Working Python script for automated grading – best used on Windows 11, but can be ported to macOS; I used Thonny to develop this. You may need to install the suggested libraries and packages for full functionality.

Prompt Design for Individualization

The prompt design process for creating student assignments is beautifully simple and can be done by the student individually, by the instructor prescriptively, or by both in tandem. Broadly, the student picks an outcome from the curriculum that needs attention or interests them, picks a language of their preference, and asks GPT-4 to create an assignment that addresses the outcome and offers difficulty options that can be adjusted to fit the timeline. Here’s a sample chat for a CSC20 outcome, and here’s a sample chat for a CSC30 outcome.
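The steps above (outcome, language, difficulty, timeline) can be captured in a small prompt template. The sketch below is one possible way to assemble such a prompt, not the wording used in the sample chats: the function name, the difficulty labels, and the prompt text itself are assumptions, and the outcome is left as a placeholder to be pasted in from the curriculum.

```python
# Hypothetical difficulty labels; adjust to whatever wording suits your classroom.
DIFFICULTY_LEVELS = ("introductory", "intermediate", "advanced")


def build_assignment_prompt(outcome, language, difficulty, weeks):
    """Assemble a prompt asking for one assignment tailored to a single student."""
    if difficulty not in DIFFICULTY_LEVELS:
        raise ValueError(f"difficulty must be one of {DIFFICULTY_LEVELS}")
    if weeks < 1:
        raise ValueError("weeks must be at least 1")
    return (
        f"Create a computer science assignment in {language} "
        f"that addresses this curricular outcome: {outcome}\n"
        f"Target difficulty: {difficulty}.\n"
        f"Time available: {weeks} week(s). "
        "Offer an easier and a harder variant in case the timeline changes.\n"
        "Do not include a solution."
    )


prompt = build_assignment_prompt(
    outcome="<paste the outcome text from the curriculum here>",
    language="Python",
    difficulty="intermediate",
    weeks=2,
)
print(prompt)
```

The resulting text is pasted into the chat by the student or the instructor; nothing in this sketch contacts an AI service.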

The instructor should be careful to document which outcomes the student has met; this has not been implemented in the script at this time, but as you will see in the next section, you can generate a script yourself that can keep track of this.
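As a starting point for such a tracker, here is a minimal sketch that records which outcomes each student has met and lists what remains. It is not part of the chapter's script; the function names, the student label, and the outcome labels are placeholders, and real outcome codes would come from the Saskatchewan curriculum documents.

```python
import json


def record_outcome(progress, student, outcome):
    """Mark an outcome as met for a student; repeat entries are ignored."""
    met = progress.setdefault(student, [])
    if outcome not in met:
        met.append(outcome)


def remaining_outcomes(progress, student, course_outcomes):
    """List the course outcomes the student has not yet met, in course order."""
    met = set(progress.get(student, []))
    return [outcome for outcome in course_outcomes if outcome not in met]


# Placeholder outcome labels, not real curriculum codes.
course_outcomes = ["Outcome 1", "Outcome 2", "Outcome 3"]

progress = {}
record_outcome(progress, "Student A", "Outcome 2")
record_outcome(progress, "Student A", "Outcome 2")  # duplicate, ignored
record_outcome(progress, "Student A", "Outcome 1")

still_needed = remaining_outcomes(progress, "Student A", course_outcomes)
saved = json.dumps(progress, indent=2)  # text that could be written to a local file
```

Serializing to JSON keeps the record in a plain file on the local machine, which fits the chapter's requirement that student data stay inside the school network.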

Here is a distillation of the universal rubric that I use. This rubric presupposes no knowledge of any particular language, focussing instead on the fundamentals of CSC itself.

- Specifications (Does it work? Is it sanitized?): /4

- Readability (Are the variable and array names meaningful?): /4

- Reusability (Can you use this to collaborate, or do you need to start over?): /4

- Documentation (Comment on everything, plan your process): /4

- Efficiency (Trim the fat! Did you avoid brute forcing and deploy automation through loops?): /4
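Because each of the five criteria is scored out of 4, an assignment is marked out of 20. The sketch below shows one way to represent the rubric as data and to validate and total a set of scores. The criterion names and maximums come from the list above; the function name and the sample scores are assumptions, and how each score is actually decided is outside the scope of this example.

```python
# Criterion names and maximum marks, taken from the rubric above.
RUBRIC = {
    "Specifications": 4,
    "Readability": 4,
    "Reusability": 4,
    "Documentation": 4,
    "Efficiency": 4,
}


def total_score(scores):
    """Validate per-criterion scores and return (earned, possible, percent)."""
    missing = set(RUBRIC) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for name, maximum in RUBRIC.items():
        if not 0 <= scores[name] <= maximum:
            raise ValueError(f"{name} must be between 0 and {maximum}")
    earned = sum(scores[name] for name in RUBRIC)
    possible = sum(RUBRIC.values())
    return earned, possible, round(100 * earned / possible, 1)


# Made-up scores for illustration only.
example = {"Specifications": 4, "Readability": 3, "Reusability": 3,
           "Documentation": 2, "Efficiency": 4}
result = total_score(example)  # (earned, possible, percent)
```

Storing the rubric as a dictionary means a criterion can be renamed or reweighted in one place without touching the totalling logic.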

A Soft-Tech Approach to CSC Classroom Management

CSC students, in an era where AI can generate code, should not be assigned homework. The instructor needs to witness the work being done to be accountable for evaluating it. CSC educators should therefore consider abolishing homework as an outdated practice that does not prioritize student success. I have found a soft-tech approach to be effective in squaring this circle. If the instructor and students are facing the same direction, with the instructor at the back of the room, the instructor can see every computer monitor in the lab. This allows the instructor to witness student work. However, if students are all working on personalized assignments, or students need to be away for a period of time and cannot do homework, then assignment submissions will be staggered. Due dates and deadlines must fall as a result. Axing deadlines and homework may seem like ID whiplash, but I have found that these policies provide relief for students, who know that punitive measures will not follow circumstances beyond their control. Learning happens when it happens – not on an instructor’s schedule. We work for the students and not the other way around.

The Evaluation Script: Development, Implementation, & Testing

I have kept all of my experimentation, prompts, resulting code, etc., in these chats. I am still working on this script, and these chats will be continuously updated to provide useful information to readers in the future.

Hacking Saskatchewan Computer Science Curricula with ChatGPT – working Python Script

Testing the software on my local machine before importing to secure school network

Student data security and privacy are a critical point of order. Again, one could upload student CSC assignments to GPT-4, along with a rubric and a haphazard prompt, press send, receive results, and post grades to the division’s LMS. Transmitting student data to third-party organizations – with or without consent – is not only unethical but may be illegal. This is why the AI-powered script I developed operates locally, ensuring that student data never leaves a school’s local network.

Vignette 2 – The Hack in Motion

As I sat down to grade the latest batch of assignments, I couldn’t help but feel relieved. Before implementing the AI-powered grading script, this task would have consumed dozens of hours spent manually reviewing line after line of student code. Now, with the script in place, everything has changed. Students generated their own assignments based on their individual coding preferences and skill levels. The script, trained on our CSC rubric, quickly analyzed each submission, returning detailed, personalized feedback in seconds. What used to take me days was now condensed into a few minutes. More importantly, the students were receiving real-time, actionable feedback.

With the script handling the bulk of the grading, I could spend more time focusing on where I was most needed – supporting struggling students and refining instructional strategies. My work is not only more efficient, but more impactful.

Responsible use of AI

Avoiding using AI would have been hypocritical in writing this chapter. All chats I have used can be found below.

- Writing assistance for this chapter

- Generating a CSC assignment for a student

- Generating the Python script for use in work environments

- Generating the Python script for use in home/test environments

Future Research and Innovation

As AI continues to develop, so too will its applications in ID. Future iterations of AI tools may include features that allow even more personalized and immediate feedback, freeing up teachers to focus on guiding students through complex problem-solving tasks. The role of AI as an evaluator in education could expand significantly, with tools that integrate seamlessly into local school networks while protecting student data. With open-source Python scripts, instructors can, with the assistance of AI, readily generate and edit Python scripts that suit their needs and improve their instruction, automate their evaluation methodologies, and improve student experience.

My next phase for the script is to adapt it to report live statistics, so that students and other stakeholders in their success can see the gradebook updated the moment work is completed. It follows that the software could be developed in a more universal way, not just as a Python script but bundled as an application with a graphical user interface (Figure 3) that can be easily adapted to individual CSC educator needs.

Figure 3

Early Conceptual GUI Design Models for Automatic CSC Software

Note. P1 and P3 stand for class periods 1 and 3, corresponding with my current teaching schedule. Brett Balon (2024) created these images by taking screenshots of a running Python script generated with GPT-4o. I dedicate any rights I hold to these images to the public domain via CC0.

Summary

In CSC education, AI tools can enhance student autonomy by allowing them to choose assignments aligned with their preferences and skill levels, boosting motivation. However, teachers should set clear expectations to prevent over-reliance on AI, ensuring that students develop problem-solving and critical thinking skills organically.

This chapter emphasizes AI’s role in assisting Saskatchewan CSC education through personalized, real-time feedback without overshadowing CSC fundamentals. While foundational CSC knowledge remains essential, AI-assisted ID offers practical tools for tailored assignments and automated grading that respond flexibly to diverse student needs. Automated grading also alleviates grading backlogs, allowing students to receive feedback when they are ready to submit assignments, thus reducing the impact of arbitrary deadlines.

The chapter recommends a classroom design and soft-tech approach that supports ethical AI use, with all students working visibly in a shared orientation that allows teachers to monitor and guide AI use responsibly. Transparency and academic integrity are prioritized: students submit AI-generated outputs for review to ensure tools are used for support, not as shortcuts to correct answers. Through this hard-tech and soft-tech marriage, teachers can foster a culture of trust, where emphasis on the learning process outweighs punitive measures.

Link to video:

Acknowledgements

I acknowledge Dr. Dirk Morrison for assisting with the initial brainstorming process that transitioned my graduate studies journey from M.Mus (MusEd) to ETAD with a focus on CSC, and Dr. Paula MacDowell for ‘installing the trampoline’!

Open Researcher and Contributor ID (ORCID)

Brett William Balon https://orcid.org/0009-0001-5777-1389

Brett W. Balon (B.Mus, B.Ed) is an M.Ed graduate student at the University of Saskatchewan and has been teaching band, music, English, history, guitar, information processing, and computer science in Saskatoon Public Schools at the collegiate level since 2006.

References

Balon, B. (2024). But one day earlier: Confronting the dragon-tyrant in Saskatchewan computer science education. In P. MacDowell (Ed.), Proceedings of the Educational Technology and Design (ETAD) Summer Institute, Saskatoon, Canada. https://hdl.handle.net/10388/15993

Chaushi, A., Chaushi, B. A., & Ismaili, F. (2024). Pros and cons of artificial intelligence in education. International Journal of Advanced Natural Sciences and Engineering Researches, 8(2), 51-57. https://as-proceeding.com/index.php/ijanser/article/view/1696

ChatGPT. (n.d.). AI in instructional design [Data]. https://chatgpt.com/share/66fb2000-c314-800f-ab04-f1e4a17149a2

ChatGPT. (n.d.). AI use in education [Data]. https://chatgpt.com/share/66fb2879-ce50-800f-80a1-b266eaffdec9

ChatGPT. (n.d.). Approaches to AI in learning environments [Data]. https://chatgpt.com/share/66fb2227-ae70-800f-b80e-41899e788065

ChatGPT. (n.d.). Ethical AI in education [Data]. https://chatgpt.com/share/66fb2915-34e8-800f-a04b-497cd9229909

Casal-Otero, L., Catala, A., Fernández-Morante, C., Taboada, M., Cebreiro, B., & Barro, S. (2023). AI literacy in K-12: A systematic literature review. IJ STEM Education, 10(29). https://doi.org/10.1186/s40594-023-00418-7

Hardman, P. (2024). How close is AI to replacing instructional designers? Substack. https://open.substack.com/pub/drphilippahardman/p/how-close-is-ai-to-replacing-instructional-e6c

Mollick, E., & Mollick, L. (2023). Assigning AI: Seven Approaches for Students, with Prompts. The Wharton School Research Paper. http://dx.doi.org/10.2139/ssrn.4475995

OpenAI. (n.d.). Sharing & publication policy. Retrieved from https://openai.com/policies/sharing-publication-policy

Python. (n.d.). Acceptable use policy. Retrieved from https://policies.python.org/pypi.org/Acceptable-Use-Policy

Saskatchewan Ministry of Education. (2018, January). Computer science 20 curriculum. Retrieved from https://curriculum.gov.sk.ca/CurriculumHome?id=446

Saskatchewan Ministry of Education. (2018, January). Computer science 30 curriculum. Retrieved from https://curriculum.gov.sk.ca/CurriculumHome?id=444

Selwyn, N. (2024). On the limits of artificial intelligence (AI) in education. Nordisk tidsskrift for pedagogikk og kritikk. https://doi.org/10.23865/ntpk.v10.6062